28th ACM User Interface Software and Technology Symposium Charlotte, NC

November 8-11, 2015


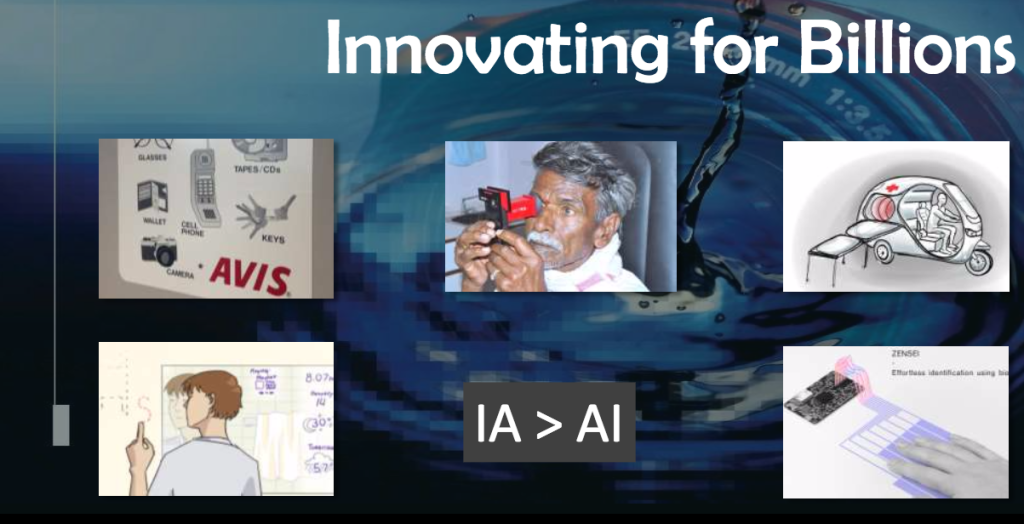

Keynote Speaker, Ramesh Raskar: We live in a very confusing world. The largest taxi service company doesn’t own any taxis. And the largest hospitality company in terms of market cap doesn’t own any hotels. The largest media company doesn’t own any media. And as we think about the next billion people who are becoming digital, their solutions will also be very unique. It’s exciting to see how this community can think about solving problems for the next billion people who are looking for not just solutions in white collar jobs or offices, but in many areas: in health, in education, in transport, in agriculture, in food, in hospitality, and even for daily wages.

A lot of these areas were considered kind of separated from the computing world, because we didn’t have a digital interface. And some of you might think that the upcoming solutions are just a bunch of apps. But, instead of apps, let’s think about DAPS, Digital Applications for Physical Services. Or DOPS, if you want to make it broader. Digital Opportunities for Physical Services. With DOPS and DAPS we have an opportunity to impact the physical world in areas where we simply couldn’t before.

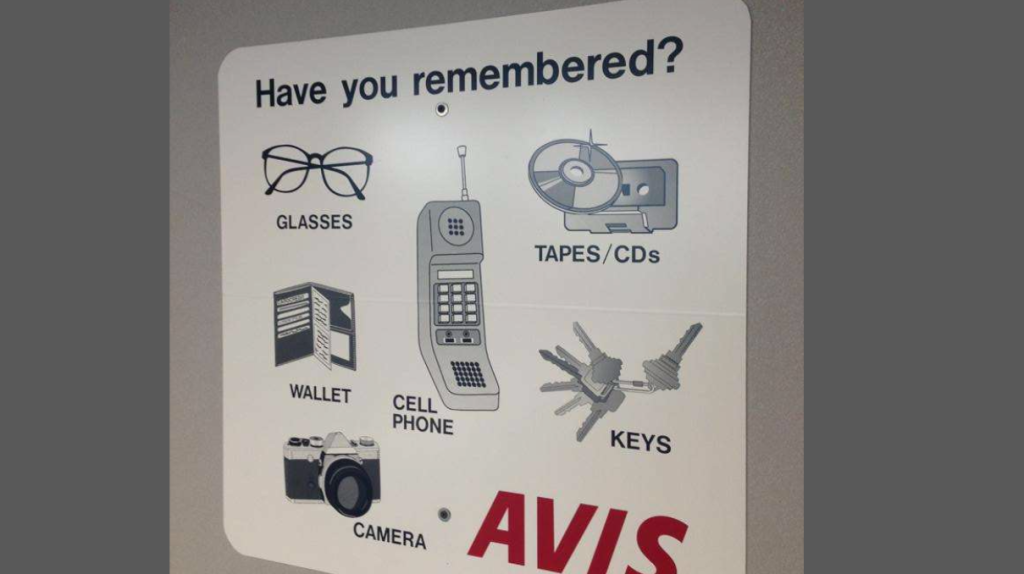

This newly digital lifestyle that we all have is creating some hilarious situations. I was just dropping off my rental car and I noticed this sign at the Avis counter. At the top right it says, “Have you forgotten your tapes and CDs?” When was the last time you carried tapes and CDs? I chuckled, and then I started looking at other parts of this poster and asked, “What are some other things that could disappear over the next few years? What is next?”

Male 2: Camera.

Ramesh: The camera has almost disappeared, right.

Male 3: The wallet.

Ramesh: The wallet is almost gone. Keys too. There is nothing special about keys; we should be able to replace them very soon. What about glasses? We could put a prescription directly into our displays. But maybe the cellphone itself will disappear, with wearables and implantables and ingestibles. So, when we look at these solutions, I think it’s clear that our interface to the digital world is going to be unique.

To start, let’s talk about eye conditions.

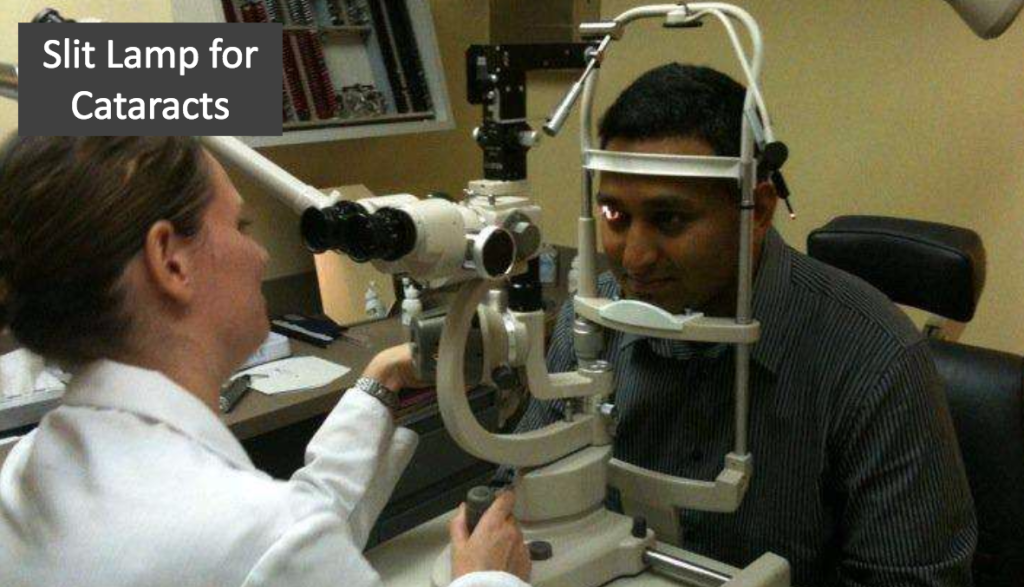

Here I am, getting an eye test for cataracts on a device that really hasn’t changed for the last 30 years. The interface is the same. To get a retinal scan, to look at the back of your eye, the device costs a quarter of a million dollars. Let’s look at the user interface. The nurse is going to shove my head towards the device, hopefully hard enough so that she gets a good picture. But the best part is, if she takes a picture and it is not good enough, do you know what she does next? She uses my head as her mouse.

To get a prescription for eyeglasses, it’s kind of funny how horrible the interfaces are. At the same time, there are billions out there who need services, but don’t get them because of a lack of good interfaces. So it’s clear that when we talk about the intersection of these emerging interfaces and emerging worlds, we cannot think linearly.

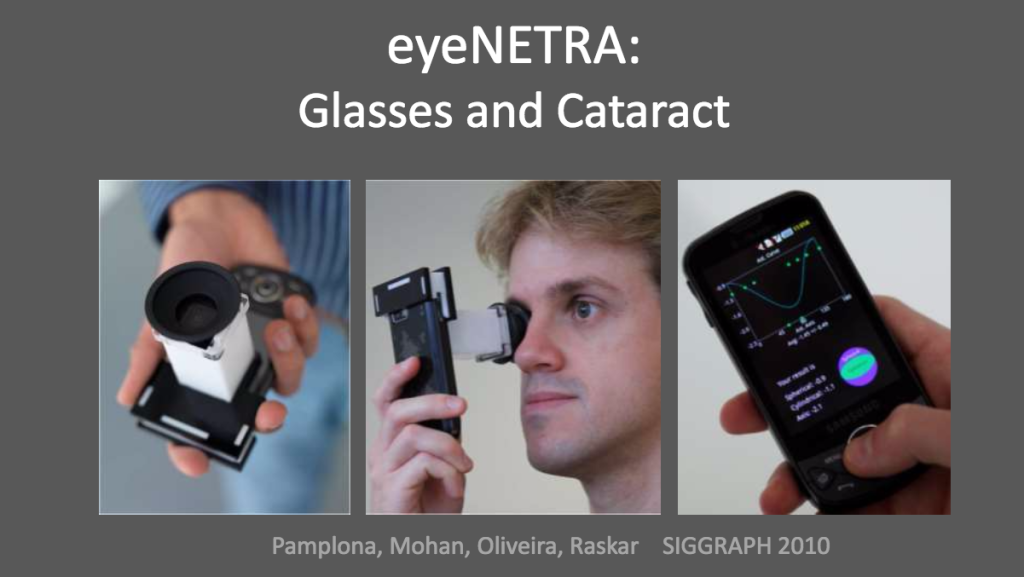

My group invented a device called EyeNetra about 5 years ago. It is a snap-on device, and it goes on top of a phone. You look through the device and click on a few buttons. When you’re done, you align some lines, and it gives you a prescription for your eyeglasses. It can scan for nearsightedness, farsightedness, and astigmatism. It can also scan for cataracts. Traditional solutions for this involve shining lasers into your eye and using extremely high quality and highly sensitive image sensors. We have something magical in our pocket when you have screens that have 300 plus dots per inch. They are actually being called retina displays.

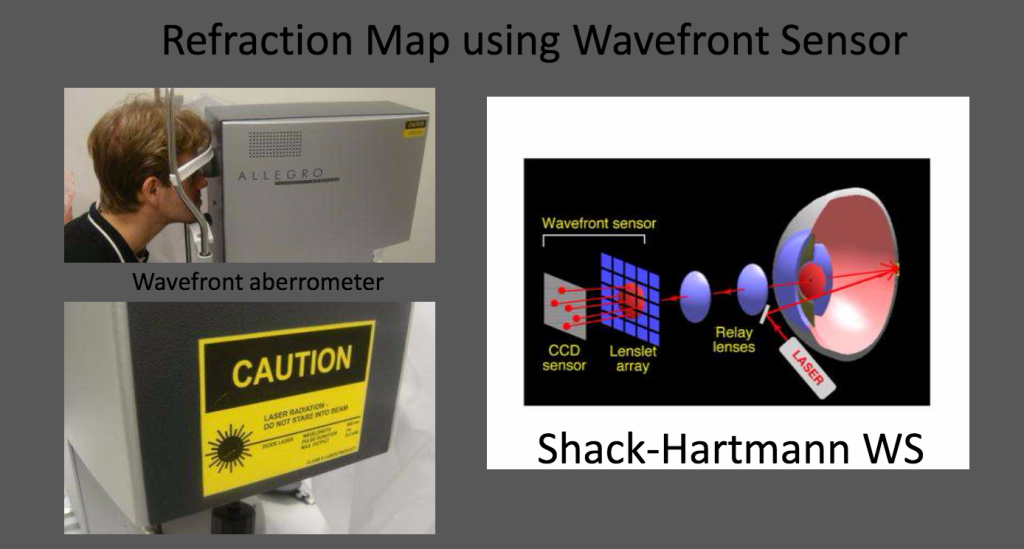

The quality is high enough that you can actually use the retina display to measure the eye. A traditional device shines a laser spot at the back of the eye. At the back of the eye, the light scatters back out, and it hits a microlens array. If all of the rays coming out of the lens are parallel, because it’s exactly one focal length away, you see a nice spot at the center of each of the microlenses. This is what you see when you have a healthy eye. If you have nearsightedness, farsightedness, or astigmatism, then those rays will not be parallel, and you’ll see a small displacement of the spot behind each of the microlenses. So using this image sensor and the displacement of those dots, we can see the change in the wavefront.
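As an editorial illustration of the measurement described above, here is a minimal sketch of how a spot displacement behind a microlens maps to a local wavefront slope, using the small-angle relation slope = displacement / focal length. The function name, the array layout, and the numbers are assumptions made for this example; they are not taken from the talk or from any particular instrument.

```python
import numpy as np

def local_slopes(spot_xy, reference_xy, focal_length):
    """Small-angle wavefront slope behind each microlens.

    spot_xy, reference_xy: (N, 2) arrays of measured and ideal spot centers,
    in the same length unit as focal_length (hypothetical inputs).
    """
    displacement = np.asarray(spot_xy, dtype=float) - np.asarray(reference_xy, dtype=float)
    return displacement / focal_length

# Ideal spot centers for three microlenses (made-up positions, in mm).
reference = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])

# Healthy eye: every spot sits at the center, so every slope is zero.
healthy = local_slopes(reference, reference, focal_length=5.0)

# Aberrated eye: every spot is shifted 0.05 mm in x behind a 5 mm microlens.
shifted = local_slopes(reference + [0.05, 0.0], reference, focal_length=5.0)
```

With these made-up numbers, the shifted spots correspond to a slope of 0.01 radians in x and zero in y.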

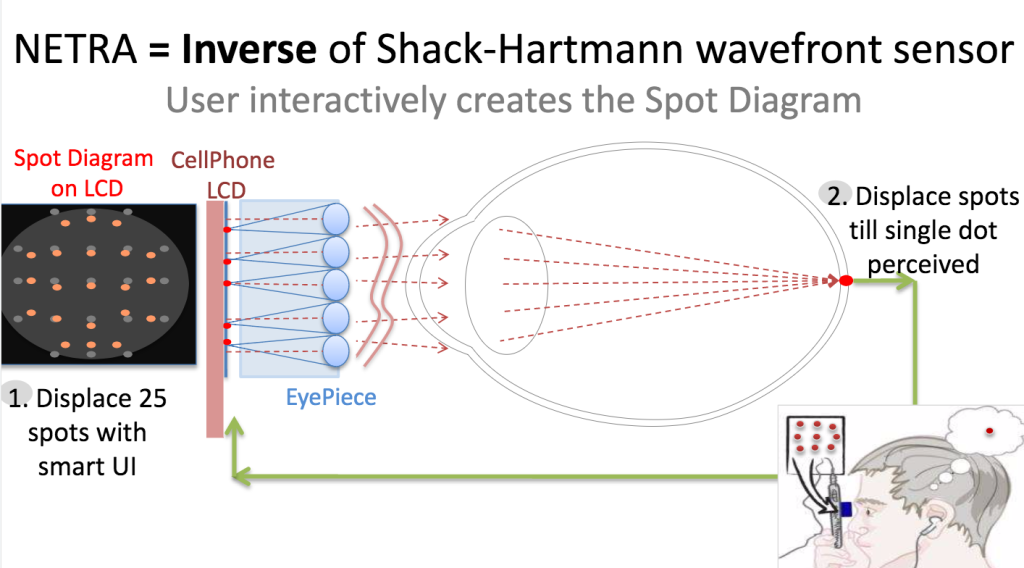

You can integrate the local slopes to recover the aberration in your eye. This is a great solution, and it has been around for about 30 years. We ask: how can we replace lasers and sensors with human interfaces? Here’s an idea using a cellphone display. We use the same microlenses, just the inverse of what you just saw. If you show the dots at the center of each microlens and you have a normal eye, you’ll see a nice spot. So again, with this uniform array of dots, you perceive a single dot.
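One common way to "integrate the slopes" is a least-squares fit of a low-order wavefront model to the measured slopes. The sketch below assumes a purely quadratic wavefront W(x, y) = a·x² + b·x·y + c·y², which covers defocus and astigmatism only. The model, the function name, and the test values are illustrative assumptions, not the method used in any specific device.

```python
import numpy as np

def fit_quadratic_wavefront(x, y, slope_x, slope_y):
    """Least-squares fit of W = a*x**2 + b*x*y + c*y**2 to measured slopes.

    Uses dW/dx = 2*a*x + b*y and dW/dy = b*x + 2*c*y.
    Returns the coefficient array [a, b, c].
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    zeros = np.zeros_like(x)
    rows_x = np.column_stack([2 * x, y, zeros])   # equations for dW/dx
    rows_y = np.column_stack([zeros, x, 2 * y])   # equations for dW/dy
    design = np.vstack([rows_x, rows_y])
    target = np.concatenate([np.asarray(slope_x, dtype=float),
                             np.asarray(slope_y, dtype=float)])
    coeffs, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coeffs

# Synthetic check: build slopes from known coefficients on a 5 x 5 microlens grid.
grid = np.linspace(-1.0, 1.0, 5)
gx, gy = np.meshgrid(grid, grid)
gx, gy = gx.ravel(), gy.ravel()
true_a, true_b, true_c = 0.5, 0.1, -0.2
sx = 2 * true_a * gx + true_b * gy
sy = true_b * gx + 2 * true_c * gy
recovered = fit_quadratic_wavefront(gx, gy, sx, sy)
```

On noise-free synthetic slopes the fit returns the coefficients that generated them; with real measurements the same fit averages out noise across the microlenses.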

But if you have an aberrated eye, the same dots at the center, shown on a uniform grid, will actually create a jumble of dots. Right? So using the interface on the screen, you can simply displace those dots intentionally so that the rays are correctly aligned, and then you get a single dot. This is a trick that you can use to displace these dots, which gives you 50 degrees of freedom to measure your refractive error. Now of course it’s going to be an annoying interface if you have to adjust 50 degrees of freedom to compute just 3 values: your spherical error, your cylindrical error, and your axis. So again, you can create a novel interface that allows you to navigate through this 50-dimensional space, but quickly, and only along the three parameters that you care about. So again, it’s turning a complicated optics and sensing problem into an interface problem. Right? The snap-on eyepiece costs next to nothing. It’s plastic lenses and some prisms. Everything else is interface and software.
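To make the "many measurements, three values" point concrete, here is a hedged sketch that collapses refractive power measured along several meridians into sphere, cylinder, and axis. It assumes the standard spherocylinder relation P(θ) = S + C·sin²(θ − axis) and the negative-cylinder convention. The function name and the sample prescription are made up for illustration, and this is not presented as the EyeNetra algorithm.

```python
import numpy as np

def fit_prescription(meridians_deg, powers_diopters):
    """Fit sphere, cylinder, and axis to per-meridian power measurements.

    Rewrites P(theta) = S + C*sin(theta - axis)**2 as the linear model
    P = M + J0*cos(2*theta) + J45*sin(2*theta) and solves by least squares.
    """
    theta = np.radians(np.asarray(meridians_deg, dtype=float))
    powers = np.asarray(powers_diopters, dtype=float)
    design = np.column_stack([np.ones_like(theta), np.cos(2 * theta), np.sin(2 * theta)])
    (m, j0, j45), *_ = np.linalg.lstsq(design, powers, rcond=None)
    cylinder = -2.0 * np.hypot(j0, j45)        # negative-cylinder convention
    sphere = m - cylinder / 2.0
    axis_deg = (0.5 * np.degrees(np.arctan2(j45, j0))) % 180.0
    return sphere, cylinder, axis_deg

# Synthetic eye (made-up values): sphere -2.00 D, cylinder -1.00 D, axis 30 degrees.
meridians = np.arange(0.0, 180.0, 22.5)        # eight measured meridians
powers = -2.0 + (-1.0) * np.sin(np.radians(meridians - 30.0)) ** 2
sphere, cylinder, axis_deg = fit_prescription(meridians, powers)
```

Eight meridian readings are more than the three unknowns require; the redundancy is what lets a least-squares fit absorb noisy user input.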

This device acts as a thermometer for your eye. Children don’t go to school because they don’t see very well. There are 2 billion people worldwide who need to wear glasses but don’t wear them. There are laborers who cannot do their jobs because they’re not able to function. So it’s just a problem of removing blurred vision, but it has huge socioeconomic consequences. Sometimes you just want to watch your favorite athlete kick that goal in high definition.

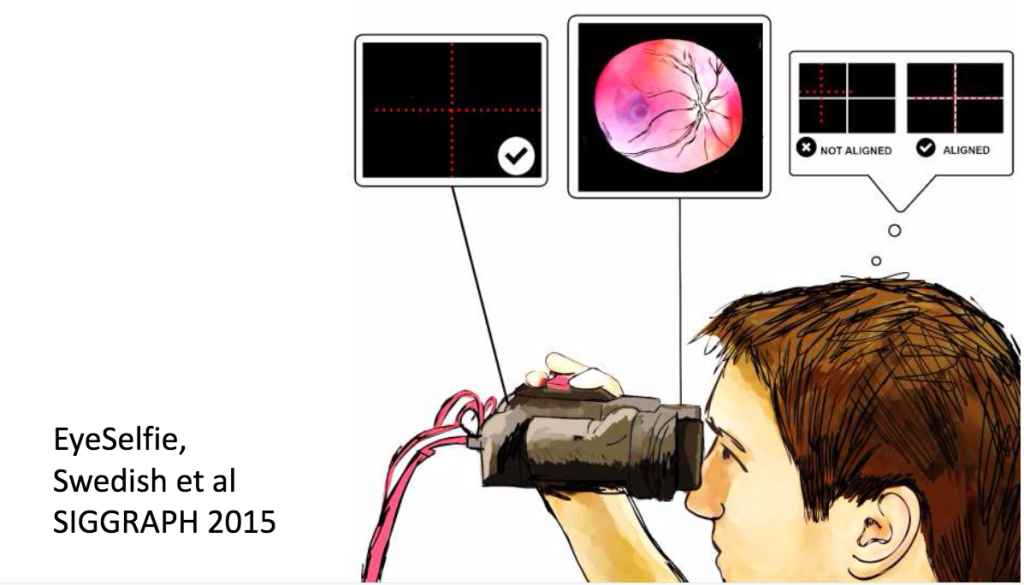

Now we’re looking at many clever solutions for interfaces. They can allow us to dramatically simplify complicated solutions and reduce their cost. More recently, we started tackling an even bigger challenge, which is taking a picture of the back of the eye. Remember the mouse? My head as a mouse? Well, what if we changed the problem? The biggest challenge in looking at the back of your eye, your retina, is not the imaging; it’s actually the alignment, because of the reflection from your cornea.

Our pupils are tiny, and alignment becomes very, very challenging. Typically, this has been solved, as I said, by just making sure the eye is well aligned with the instrument. So we turned the problem around, and we create visual cues inside this device. We call it the EyeSelfie, because in a selfie you know the picture is being taken because you can see yourself. So, instead of relying on an expert operator, you become the expert in aligning the camera and taking the picture, with all the feedback you need.

EyeSelfie is not just a 2D image, it’s actually a 4D image. As long as you’re aligned, if you can see the line pattern, the camera can see you. Using these mechanics, it will see the back of the eye. Taking these ideas of simplifying interfaces, my team and I had dozens of ideas for how we can create solutions for the blind and solutions for the visually challenged.

I want to have superhuman vision. I have realized that if we do this one project at a time working with masters and PhD candidates at the MIT Media Lab, it could take us 15 to 20 years to get all those ideas out. Can we do something to speed up this timeline? At the MIT Media Lab, everyone has a lot of resources, and everyone is able to be very productive. But, the next 5 billion people that we want to help are not in Boston. We need to go to these people and understand what their challenges are, and what can be done for these communities.

We also have an opportunity to solve problems that we’re not even thinking about. To do this we launched an initiative called REDx: Rethinking Engineering Design eXecution. Through the REDx platform, we engage local innovators and source problems to work on. This way, the whole world becomes our lab.

REDx is not a competition or a hackathon or some kind of outreach program. It is highly creative exploration and research. We carefully consider what instruments to work on and we engage innovators from all over the world. We discuss our parameters, and we consider the constraints we need to handle. We learn a lot from these challenges, and then we work together to create solutions. REDx now looks beyond eye health. We consider other health problems as well, including oral health, sleeping disorders, ear infections, and hearing tests. These devices are built with simple technologies like electronics, computer vision, machine learning, and signal processing. In the olden days the doctors were the experts, but we realize that there’s a limit to what a doctor can do. As these devices become smarter and smarter, it is amazing what they can do. The most underutilized resource in a healthcare setting is the patient himself or herself. If we as subjects can use really complex technologies, why are we being treated like vegetables when we go and get health solutions? We are capable of using smart interfaces.

By using this one simple idea of empowering the end user, we can come up with many solutions moving forward. You know that as good as AI is, IA can beat AI. Intelligence Amplification can beat Artificial Intelligence. Using this philosophy, we have built a whole range of solutions. These include: new stethoscopes, new otoscopes, new ways to measure infections, new ways to look at oral health, new types of ECG and EMG solutions. All using basic devices that empower the end user.

Let’s talk about the emerging world, and let’s make sure that we are talking about the right things. I think some time ago, emerging worlds meant developing countries, but we want it to mean something more than that. I think some time ago our efforts focused on saving lives. You know, how can we distribute vaccines, how can we distribute mosquito nets. Then in the last 10 or 15 years, the position changed and companies started asking, “How can we exploit emerging worlds as emerging markets?”

We’re in a very unique world where there is business opportunity in newly digital citizens. We can truly co-innovate with partners on the ground. This is an important new model for us. Let’s look at some projects from the Camera Culture Group that take on large problems that can be solved through technology and interfaces. How about a CAT scan machine that can fit in a rickshaw?

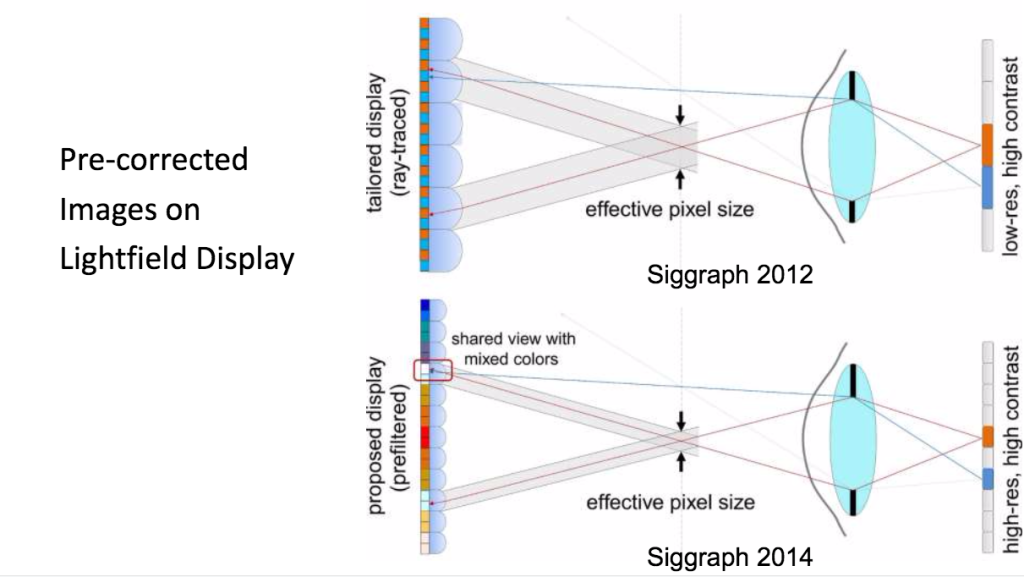

Here is another example: there are two billion people who need prescription glasses, but don’t wear them. How can we modify our screens so that your prescription can be dialed in to your displays? Once calibrated, the display will appear blurry to everyone else, but to you it looks perfectly sharp. Schools and sharing professors would allow us to display high quality information. Again, we would convert a 2D display into a light field display and pre-correct the 2D image. In 4D ray space, the inversion is actually very stable. So pre-correcting the light field and displaying it for an individual with blurred vision is actually possible. Once you create a light field display on the screen, which has to be sufficiently high resolution, you can dial in your prescription, so the same display can be used for people with different prescriptions.

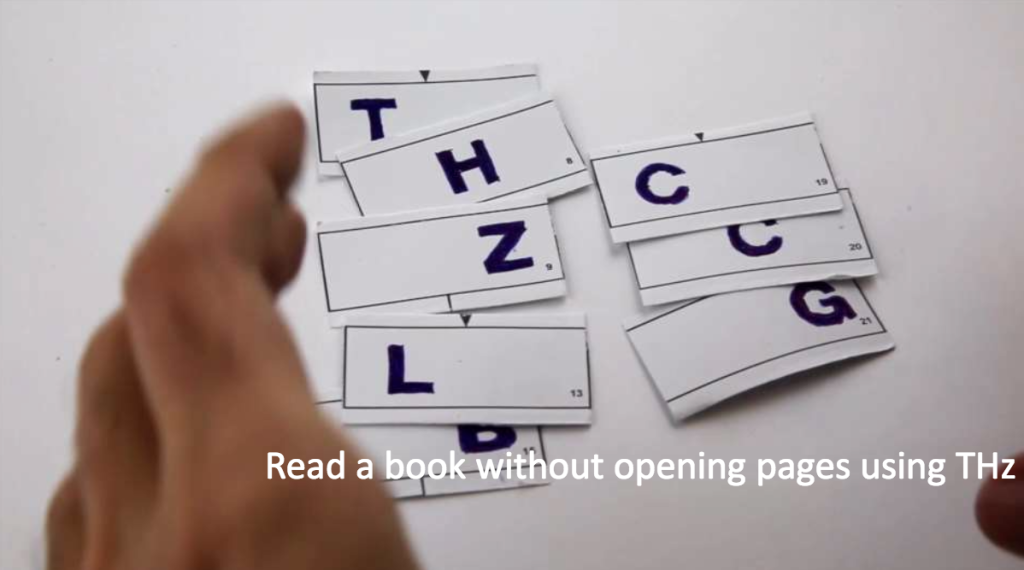

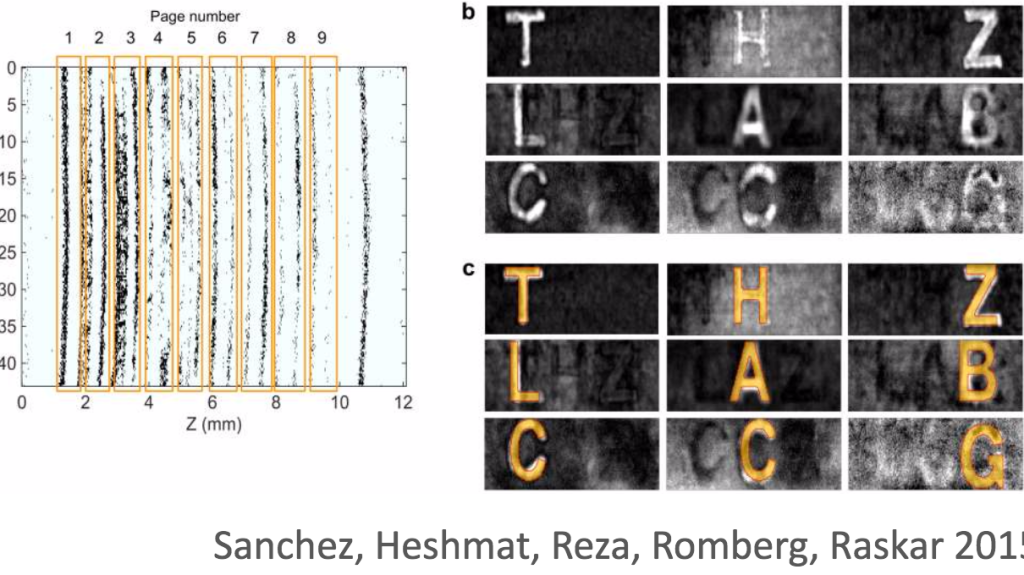

Now let’s consider reading a book without opening it, page by page. In emerging worlds, in ancient worlds, there are many archives: beautiful information that’s hidden away from us, because we simply cannot open the scrolls or books or artifacts. How would we tackle this problem? We can use a different part of the electromagnetic spectrum, in this case terahertz. Terahertz waves are 300 microns to a millimeter in wavelength, but the pages of a book are about 20 micrometers thick. This is a huge discrepancy between the wavelength and the thickness of the pages. So, we’re going to create new techniques in the lab that allow us to see the images. The paper, the ink, and the air are nearly transparent at terahertz frequencies. At the same time, the change in refractive index between the paper and the ink is what allows us to get enough reflections off of those pages. That difference is only about 4%, and we can start looking at these different texts on different pages, and we can also deal with occlusions as we are looking at it from above. We could use a terahertz sensor to do interferometric measurements and compute raw data: the hypercube.
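To put numbers on the wavelength-versus-thickness discrepancy quoted above, the short sketch below converts the stated wavelengths to frequency with f = c / λ and compares them with the stated 20 micrometer page thickness. The function names are invented for this illustration; only the wavelengths and the page thickness come from the talk.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def frequency_thz(wavelength_m):
    """Frequency in terahertz for a free-space wavelength in meters."""
    return SPEED_OF_LIGHT / wavelength_m / 1e12

def wavelengths_per_page(wavelength_m, page_thickness_m=20e-6):
    """How many page thicknesses fit inside one wavelength."""
    return wavelength_m / page_thickness_m

short_wave, long_wave = 300e-6, 1e-3   # 300 microns and 1 millimeter

band_thz = (frequency_thz(long_wave), frequency_thz(short_wave))
ratio_range = (wavelengths_per_page(short_wave), wavelengths_per_page(long_wave))
```

The band works out to roughly 0.3 to 1 THz, and a single wavelength spans about 15 to 50 page thicknesses, which is why the pages cannot be resolved by wavelength alone.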

Then we reshape this hypercube and start looking at the signals. Terahertz waves are unique. They’re not like light; the images are not just intensity images. The fields are electric fields, and they might actually get inversions at the paper interface. Now we can see that we have nine pages. You can see the V-shaped hypercubes, and we can start the OCR, and also take care of occlusions by building the physical model for heat ray removal of interference from dot layers. So far, we can only look at about nine pages. We cannot read through a whole book, because we still have to optimize the power and optimize the algorithms.

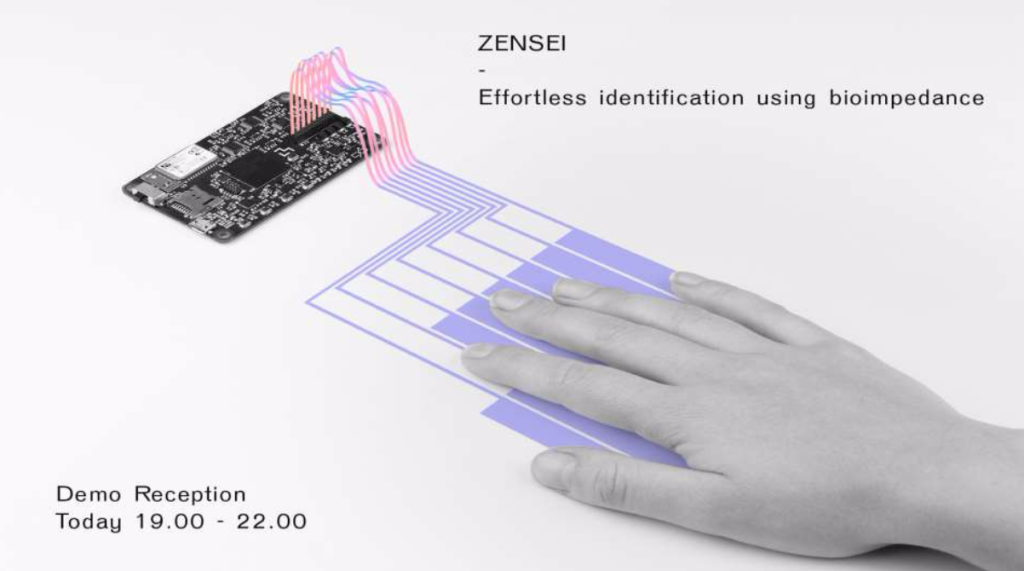

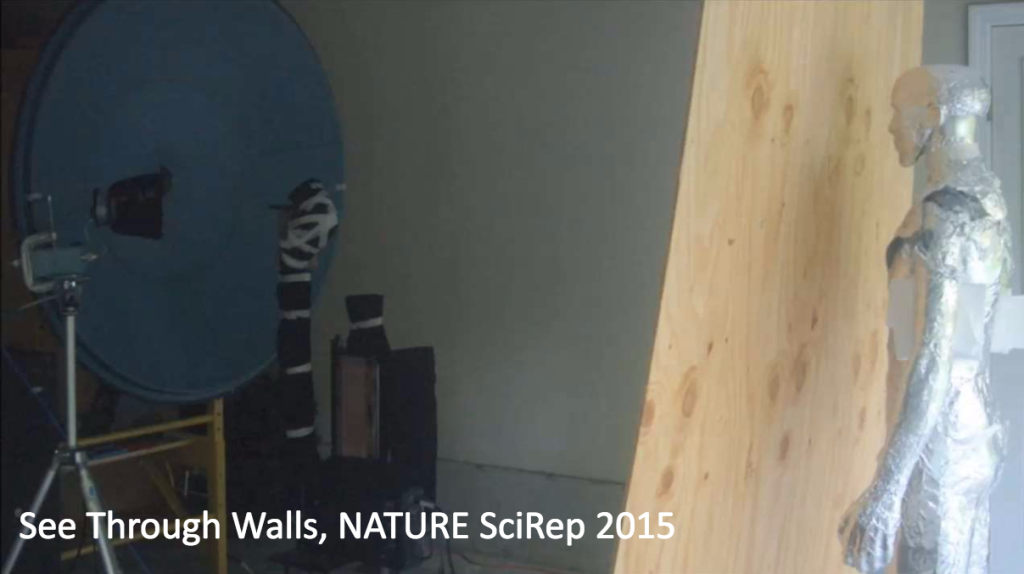

We can take these ideas beyond imaging, beyond the electromagnetic spectrum and into electrical interfaces. Imagine an interface that allows you to do effortless identification, in this case through bioimpedance. So, without a password, without the literacy needed to enter text, and without very cumbersome interfaces, you can identify a person. There’s a demo you’ll see in the evening at Google to show this. Again, we can look further and create interfaces to look at people in hazardous conditions, or in some cases, to allow you to see through walls. You can use other frequencies. Collaborators at MIT and also friends at UW have been playing with RF for quite some time. Now we can use radio frequencies and effectively create a microwave camera that can create 3D dynamic images.

In this example we have a mannequin behind a wall. You can see timed images with picosecond resolution. We hope this will lead to new interfaces and to new opportunities in emerging worlds. And the other demo that my group is showing in the evening shows how to use radio frequency signals for through-wall interfaces.

What are some other challenges in emerging worlds? Can you detect cancer by detecting circulating tumor cells in your body? One method to do this uses fluorescence lifetime imaging. We create a physical interface that looks much like a blood pressure cuff and measure the fluorescence lifetime of tagged cells, fluorophore-tagged cells. As we know, a benign tumor versus a malignant tumor can change the lifetime of the tagged fluorophore, and we can measure that. Or can we create completely new interfaces for oral health that use your toothbrush as an input device, as a sensing device? Now, as I said earlier, we live in a world where we get digital services from companies who don’t own any of the physical assets.

Moving forward, we will get health solutions without hospitals. We will learn without schools. We will grow food without depending on farms. And we’ll transact without currency. So the digital opportunities for physical systems are huge. The way large organizations in the rich world tried to solve this was to develop the technology here, throw it over the wall into the emerging world, and see if it works. And that didn’t go very far. So some of them said, “Hey, let’s open labs, and let’s send experts to Sao Paulo and Bangalore and Beijing.”

In his book, Geek Heresy, Kentaro Toyama describes amplification, where technology in most cases simply amplifies what’s already on the ground. If things are getting better, they get better faster. But if things are not going well, the technology on its own is not going to change that. We need a third model, a check out together model, which is thinking about solving those digital problems for physical systems. How can you work together with hundreds of innovators, inventors, and practitioners?

This is very complex. As a computing community, it also seems very daunting for us. We recently took a huge leap and said, “Let’s try to look at not just health solutions and certain imaging solutions, but a collection of problems that we can target in an integrated place.” And that’s the Kumbh Mela. For the Kumbh Mela in India, 30 million people show up for 30 days for a pilgrimage.

The festival has been going on for thousands of years, and there are always challenges. They have usually been tackled through large police and paramedic forces and huge top-down infrastructure. Here we have something interesting. We have to deal with challenges in food and health and housing and transactions and so on.

So over the last two years, our team at MIT, in collaboration with many companies and foundations, has been making trips roughly once every three months to think about the Kumbh Mela and to find some interesting digital opportunities to tackle these challenges in coordination with the local government, local universities, and local businesses. We look at sensing technologies to count the crowd. We’re looking at food distribution, food logistics, and various ways to steer the crowd using cellphone tower data. We are building pop-up housing. We are setting up food distribution that reaches networks of places you otherwise cannot reach. And it has been just fascinating. I encourage you to go to our website to learn more, and everyone is welcome to come and participate in this effort. The next camp is in just about a couple of months, very close to Bombay, at the end of January.

So when it comes to big data or the internet of things and new abilities for citizens, it’s obvious that we cannot think linearly and scale solutions that we already know. We have to leapfrog and think about solutions that are well beyond the out-of-sight and further out of our mind. So with some of the collaborators at the Media Lab, we have started the REDx initiative, and we have centers popping up in many parts of the world. You may know some of these people. We work with Sandy Pentland, Kent Larson, Ethan Zuckerman, Cesar Hidalgo, and Iyad Rahwan. Also we have Professor Ishii here from the Media Lab, who constantly inspires me to do new things.

I think we have reached a very interesting milestone. If you believe the hype out there, the number of users coming online and the number of users using new digital solutions is mushrooming. We have 30 million users every month coming online who were not online before. So I hope that although we have made great progress in personal productivity or entertainment, we will see digitalized organizations come into play over the next 30 years. We will also look at additional professors to health, wealth, and well-being. And as a faculty member, it was always about publish or perish. And the reason it was publish or perish was that the only way to get your ideas out was through publishing: you submit your paper, your paper gets presented, and over the next five to ten years, somebody else will build on top of it, pick up your idea, and bring it into the real world.

But the paradigm changed, and we started saying “demo or die”. Because actually, it’s easy enough to prototype: you build things and show them, not just write a paper about them. But let’s go one step beyond that. The same way that a few years ago it became easy to demo live, it has become easier and easier to deploy, through hardware technologies, through software platforms, through cloud architectures, through worldwide networks.

I hope, over the next 30 years, we’ll have deploy sessions too and follow the mantra “deploy or die”. Okay, how many of you remember this fantastic use of pencils? You know, the most useful implementation of the pencil, as an interface to music. And so we never know how the things you build in this community will go out there as interfaces, and how those interfaces will be used out there. Now, we took a picture of this woman outside Hyderabad in India. She has converted a technology, which is a weighing scale, into her business. And she’s very proud of that. Look at the entrance to her business. She has a mat. She’s very proud of it. So we never know how, through these micropreneurs and entrepreneurs, the solutions and technologies we build in this community will have an impact out there in the world. And we’re here to keep trying.

So to conclude, the world is our lab. There are billions of citizens who are hungry for new interfaces to physical solutions. There are industries and organizations that need complete openness in the emerging world, by co-innovating with partners on the ground. There are dozens and dozens of completely new research directions, and of course hundreds of new thesis topics. The world is our lab, so let’s solve the billion dollar problems that will touch a billion lives. Thank you.