Femto-Photography: Visualizing Photons in Motion at a Trillion Frames Per Second


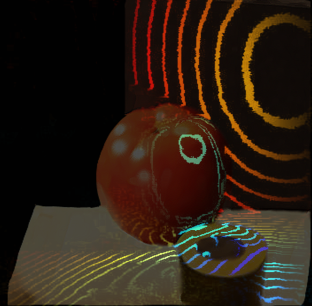

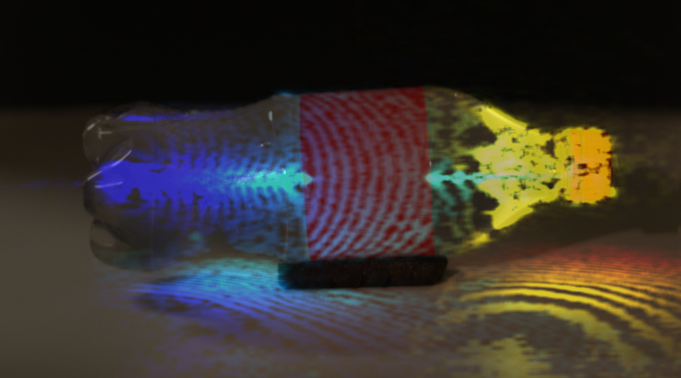

Light in Motion: A combination of modern imaging hardware and a reconstruction technique visualizes light propagation via repeated, periodic sampling.

Ripples of Waves: A time-lapse visualization of the spherical fronts of advancing light reflected by surfaces in the scene.


Time-Lapse Visualization: Color coding of light with a delay of a few picoseconds in each period.

Video by Nature.com


Team

Ramesh Raskar, Associate Professor, MIT Media Lab; Project Director (raskar(at)mit.edu)

Moungi G. Bawendi, Professor, Dept of Chemistry, MIT

Andreas Velten, Postdoctoral Associate, MIT Media Lab (velten(at)mit.edu)

Everett Lawson, MIT Media Lab

Amy Fritz, MIT Media Lab

Di Wu, MIT Media Lab and Tsinghua U.

Matt O’Toole, MIT Media Lab and U. of Toronto

Diego Gutierrez, Universidad de Zaragoza

Belen Masia, MIT Media Lab and Universidad de Zaragoza

Elisa Amoros, Universidad de Zaragoza

Femto-Photography Members

Nikhil Naik, Otkrist Gupta, Andy Bardagjy, MIT Media Lab

Ashok Veeraraghavan, Rice U.

Thomas Willwacher, Harvard U.

Kavita Bala, Shuang Zhao, Cornell U.

Abstract

We have built an imaging solution that allows us to visualize the propagation of light. The effective exposure time of each frame is two trillionths of a second, and the resulting visualization depicts the movement of light at roughly half a trillion frames per second. Direct recording of reflected or scattered light at such a frame rate with sufficient brightness is nearly impossible. We use an indirect ‘stroboscopic’ method that records millions of repeated measurements by carefully scanning in time and viewpoint. We then rearrange the data to create a ‘movie’ of a nanosecond-long event.

The device was developed by the MIT Media Lab’s Camera Culture group in collaboration with the Bawendi Lab in the Department of Chemistry at MIT. A laser pulse that lasts less than one trillionth of a second is used as a flash, and the light returning from the scene is collected by a camera at a rate equivalent to roughly half a trillion frames per second. However, because of the very short exposure times (roughly two trillionths of a second) and the camera’s narrow field of view, the video is captured over several minutes by repeated, periodic sampling.

The new technique, which we call Femto-Photography, consists of femtosecond laser illumination, picosecond-accurate detectors and mathematical reconstruction techniques. Our light source is a Titanium Sapphire laser that emits pulses at regular intervals of about 13 nanoseconds. These pulses illuminate the scene and also trigger our picosecond-accurate streak tube, which captures the light returned from the scene. The streak camera has a reasonable field of view in the horizontal direction but a very narrow one (roughly equivalent to one scan line) in the vertical direction. Each recording therefore captures only a ‘1D movie’ of this narrow field of view: roughly 480 frames, each with an exposure time of roughly 1.71 picoseconds. Through a system of mirrors, we orient the camera’s view toward different parts of the object and capture a movie for each view. We keep the delay between the laser pulse and the start time of each movie fixed. Finally, our algorithm uses the captured data to compose a single 2D movie of roughly 480 frames, each with an effective exposure time of 1.71 picoseconds.
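The timing figures above can be cross-checked with a few lines of arithmetic. This is a minimal Python sketch using only numbers quoted on this page (1.71 ps per frame, roughly 480 frames, a pulse every ~13 ns). The check that one movie window fits inside the interval between pulses is our own illustrative sanity test, not a step described by the authors.

```python
# Approximate figures quoted on this page.
FRAME_TIME_S = 1.71e-12   # effective exposure time per frame
NUM_FRAMES = 480          # frames in one reconstructed movie
PULSE_PERIOD_S = 13e-9    # interval between laser pulses (~13 ns)


def timing_summary(frame_time_s=FRAME_TIME_S, num_frames=NUM_FRAMES,
                   pulse_period_s=PULSE_PERIOD_S):
    """Return (frame rate in frames/s, movie length in ns, fits between pulses?)."""
    frame_rate = 1.0 / frame_time_s
    window_s = num_frames * frame_time_s
    # Illustrative check: one movie window should end before the next pulse arrives.
    fits = window_s < pulse_period_s
    return frame_rate, window_s * 1e9, fits


rate, movie_ns, fits = timing_summary()
print(f"{rate:.3g} frames/s, {movie_ns:.2f} ns movie, fits between pulses: {fits}")
# prints: 5.85e+11 frames/s, 0.82 ns movie, fits between pulses: True
```

About 5.85 × 10^11 frames per second is the ‘roughly half a trillion’ figure, and 480 frames of 1.71 ps span about 0.82 ns, consistent with a nanosecond-long event.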

Beyond its potential for artistic and educational visualization, applications include industrial imaging to analyze faults and material properties, scientific imaging for understanding ultrafast processes, and medical imaging to reconstruct sub-surface elements, i.e., ‘ultrasound with light’. In addition, photon path analysis will allow new forms of computational photography, e.g., rendering and re-lighting photos using computer graphics techniques.

Download high-resolution photos and videos


Ripples over Surfaces: The advancing spherical front intersects the surface of the table, the fruit and the back wall.

Painting a Photo in Time: We can watch the progressive synthesis of a photograph. Color coding all video frames and summing them creates a single ‘rainbow’ of wavefronts.
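The ‘rainbow’ composite described above can be sketched as tinting each frame with a hue that encodes its time index and then adding the tinted frames. The page only says that frames are color coded and summed; the specific mapping here (red for the earliest frame, moving through the hue wheel toward violet for the last) and the plain additive sum are our assumptions, and `rainbow_composite` is a hypothetical name.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[float, float, float]


def rainbow_composite(movie: List[List[List[float]]]) -> List[List[RGB]]:
    """Color code each frame by its time index and sum all frames.

    movie[t][y][x] is a grayscale intensity. Hues are spread over [0, 0.8]
    so the last frame is violet rather than wrapping back to red.
    """
    num_frames = len(movie)
    height, width = len(movie[0]), len(movie[0][0])
    out = [[(0.0, 0.0, 0.0) for _ in range(width)] for _ in range(height)]
    for t, frame in enumerate(movie):
        hue = 0.8 * t / max(num_frames - 1, 1)
        r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
        for y in range(height):
            for x in range(width):
                v = frame[y][x]
                pr, pg, pb = out[y][x]
                out[y][x] = (pr + v * r, pg + v * g, pb + v * b)
    return out


# A bright spot moving one pixel per frame across a 1 x 3 image.
movie = [[[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]], [[0.0, 0.0, 1.0]]]
composite = rainbow_composite(movie)
print(composite[0][0])  # prints: (1.0, 0.0, 0.0)
```

Each pixel ends up colored by when light reached it, which is what turns a stack of wavefronts into a single rainbow image.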


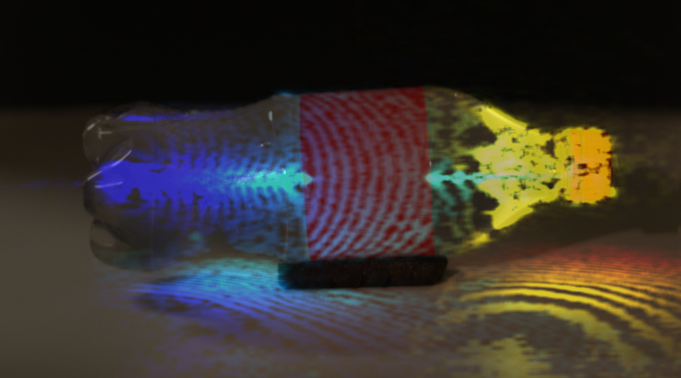

Volumetric Propagation: The pulse of light is less than a millimeter long. Between frames, the pulse travels less than half a millimeter. Light travels about a foot in a nanosecond, so its journey through a one-foot-long bottle lasts barely one nanosecond (one billionth of a second).

Bullet of Light: The slow-motion playback creates the illusion of a group of photons traveling through the bottle.
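The distances in the caption follow from d = (c / n) · t, where n is the refractive index of the medium. In vacuum, one 1.71 ps frame corresponds to about 0.51 mm, so the ‘less than half a millimeter’ step holds inside a denser medium. The page does not say what fills the bottle; n = 1.33 (water) is an assumption used only for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
FOOT_MM = 304.8    # one foot in millimeters


def distance_mm(duration_s, refractive_index=1.0):
    """Distance (mm) light covers in duration_s in a medium with the given index."""
    return C / refractive_index * duration_s * 1e3


pulse_mm = distance_mm(1e-12)                # a pulse shorter than 1 ps: under ~0.30 mm
step_vacuum_mm = distance_mm(1.71e-12)       # per-frame step in vacuum: ~0.51 mm
step_water_mm = distance_mm(1.71e-12, 1.33)  # per-frame step assuming water: ~0.39 mm
feet_per_ns = distance_mm(1e-9) / FOOT_MM    # ~0.98 ft per nanosecond in vacuum
print(f"{pulse_mm:.2f} {step_vacuum_mm:.2f} {step_water_mm:.2f} {feet_per_ns:.2f}")
# prints: 0.30 0.51 0.39 0.98
```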

References

A. Velten, R. Raskar, and M. Bawendi, “Picosecond Camera for Time-of-Flight Imaging,” in Imaging Systems Applications, OSA Technical Digest (CD) (Optical Society of America, 2011).

A. Velten, E. Lawson, A. Bardagjy, M. Bawendi, and R. Raskar, “Slow Art with a Trillion Frames per Second Camera,” SIGGRAPH 2011 Talk.

R. Raskar and J. Davis, “5D Time-Light Transport Matrix: What Can We Reason about Scene Properties?” July 2007.

Frequently Asked Questions

How can one take a photo of photons in motion at a trillion frames per second?

We use a picosecond-accurate detector: a special imager called a streak tube, which behaves like an oscilloscope, with a corresponding trigger and beam deflection. A light pulse enters the instrument through a narrow slit along one direction. It is then deflected in the perpendicular direction, so that photons that arrive first hit the detector at a different position than photons that arrive later. The resulting image forms a “streak” of light. Streak tubes are often used in chemistry or biology to observe millimeter-sized objects but rarely for free-space imaging.
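The streak principle can be sketched as a histogram: position along the slit stays a column, and arrival time is converted into a row by the sweep. This idealized model assumes a perfectly linear sweep that starts at the trigger and a time bin equal to the 1.71 ps frame time; real streak tubes first convert photons to photoelectrons and deflect those, a detail not modeled here. `streak_image` and its parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def streak_image(events: Iterable[Tuple[int, float]], num_cols: int,
                 num_rows: int, bin_ps: float = 1.71,
                 trigger_ps: float = 0.0) -> List[List[int]]:
    """Histogram photon events into an idealized streak image.

    Each event is (column along the slit, arrival time in ps). A linear sweep
    starting at trigger_ps deflects a photon arriving at time t to row
    floor((t - trigger_ps) / bin_ps), so later photons land further along
    the time axis. Photons outside the sweep window are dropped.
    """
    image = [[0] * num_cols for _ in range(num_rows)]
    for col, t_ps in events:
        row = int((t_ps - trigger_ps) // bin_ps)
        if 0 <= row < num_rows and 0 <= col < num_cols:
            image[row][col] += 1
    return image


events = [(0, 0.5), (0, 2.0), (1, 2.0), (1, 100.0)]  # last photon misses the window
print(streak_image(events, num_cols=2, num_rows=3))
# prints: [[1, 0], [1, 1], [0, 0]]
```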

Can you capture any event at this frame rate? What are the limitations?

We can NOT capture arbitrary events at picosecond time resolution. If the event is not repeatable, a single recording cannot reach the required signal-to-noise ratio (SNR), which makes it nearly impossible to capture. We exploit the simple fact that photons statistically trace the same path in repeated pulsed illuminations. By carefully synchronizing the pulsed illumination with the capture of reflected light, we record the same pixel in exactly the same relative time slot millions of times to accumulate sufficient signal. Our time resolution is 1.71 picoseconds, during which light travels about 0.5 mm, so any activity spanning less than 0.5 mm will be difficult to record.
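Why repetition helps can be made concrete with a shot-noise model. This is a minimal sketch assuming photon (Poisson) noise is the only noise source, which ignores dark counts, readout noise and timing jitter; the function names and the photon rate in the example are hypothetical. Summing N pulses that each deliver on average λ photons to a time bin gives a mean of Nλ and a standard deviation of √(Nλ), so SNR grows as √N.

```python
import math


def shot_noise_snr(photons_per_pulse, num_pulses):
    """SNR of one pixel/time bin after summing num_pulses repeated pulses.

    Assumes shot noise only: the summed count is Poisson with mean N * lam,
    so SNR = mean / std = sqrt(N * lam).
    """
    return math.sqrt(photons_per_pulse * num_pulses)


def pulses_needed(target_snr, photons_per_pulse):
    """Smallest number of repeated pulses that reaches target_snr under shot noise."""
    return math.ceil(target_snr ** 2 / photons_per_pulse)


# Hypothetical: a time bin that catches on average one photon every four pulses.
print(pulses_needed(10, 0.25), shot_noise_snr(0.25, 400))
# prints: 400 10.0
```

When a bin receives only about 10^-4 photons per pulse, the same target SNR of 10 needs on the order of a million pulses, which is the regime where the ‘millions of times’ above comes in.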

How does this compare with capturing videos of bullets in motion?

About 50 years ago, Doc Edgerton created stunning images of fast-moving objects such as bullets. We follow in his footsteps. Beyond the scientific exploration, our videos could inspire artistic and educational visualizations. The key technology back then was a very short-duration flash to ‘freeze’ the motion. Light, however, travels about a million times faster than a bullet, so observing photons (light particles) in motion requires a very different approach. A bullet is recorded in a single shot, i.e., there is no need to fire a sequence of bullets. But to observe photons, we need to send the pulse (a bullet of light) into the scene millions of times.

What is new about the Femto-photography approach?

Modern imaging technology captures and analyzes real-world scenes using 2D camera images. These images correspond to steady-state light transport and ignore the delay in the propagation of light through the scene. Each ray of light takes a distinct path through the scene, and that path carries a plethora of information that is lost when all the light rays are summed at a traditional camera pixel. Light travels very fast (~1 foot in 1 nanosecond), and sampling light at these time scales is well beyond the reach of conventional sensors (fast video cameras have microsecond exposures). On the other hand, LiDAR and femtosecond imaging techniques such as optical coherence tomography, which do employ ultrafast sensing and laser illumination, capture only the direct light (ballistic photons) coming from the scene and ignore the indirectly reflected light. We combine recent advances in ultrafast hardware and illumination with a reconstruction technique that reveals the light-path information these approaches discard.
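The information loss at a conventional pixel can be illustrated in a few lines. In this sketch a pixel’s time-resolved response is a list of intensities per time bin; a steady-state camera records only their sum. The two profiles are hypothetical: one pixel sees only direct light, the other sees a weaker direct return plus a later indirect bounce, yet both produce the same conventional reading.

```python
def steady_state_pixel(transient):
    """A conventional pixel integrates the whole time profile into one number."""
    return sum(transient)


def arrival_bins(transient, threshold=0.0):
    """Time bins in which light above `threshold` reaches the pixel."""
    return [i for i, value in enumerate(transient) if value > threshold]


# Hypothetical time profiles of one pixel (arbitrary units per time bin):
# direct light only, versus direct light plus a later indirect bounce.
direct_only = [0.0, 3.0, 0.0, 0.0, 0.0]
direct_and_bounce = [0.0, 2.0, 0.0, 1.0, 0.0]

print(steady_state_pixel(direct_only), steady_state_pixel(direct_and_bounce))  # prints: 3.0 3.0
print(arrival_bins(direct_only), arrival_bins(direct_and_bounce))  # prints: [1] [1, 3]
```

The summed values are identical, while the time-resolved profiles still show that the second pixel received light along a longer, indirect path.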

What are the challenges?

The fastest electronic sensors have exposure times of nanoseconds or hundreds of picoseconds. To capture the propagation of light in a tabletop scene, we need a time resolution of about 1 ps, i.e., one trillion frames per second. To achieve this speed we use a streak tube. The streak camera uses a trick to capture a one-dimensional field of view at close to one trillion frames per second in a single streak image. To obtain a complete movie of the scene, we stitch together many of these streak images. The resulting movie is not of one pulse but an average of many pulses, so we must carefully synchronize the laser and camera to make sure each of those pulses looks the same.
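The stitching step can be sketched as a pure rearrangement of data. This minimal sketch assumes each mirror position (scan line y) yields one streak image indexed as `streaks[y][x][t]`, and that the fixed delay between laser pulse and movie start makes time bin t mean the same instant for every scan line. The data layout and `stitch_streaks` are hypothetical; the authors’ reconstruction may also include calibration and intensity corrections that are not modeled here.

```python
from typing import List

Streak = List[List[float]]  # streak[x][t]: intensity at slit column x, time bin t
Frame = List[List[float]]   # frame[y][x]: one 2D image


def stitch_streaks(streaks: List[Streak]) -> List[Frame]:
    """Rearrange one streak image per scan line into a list of 2D frames.

    streaks[y][x][t] is the measurement for scan line y. Output movie[t][y][x]
    gathers the same time bin t from every scan line into frame t.
    """
    num_lines = len(streaks)
    num_cols = len(streaks[0])
    num_bins = len(streaks[0][0])
    movie = []
    for t in range(num_bins):
        frame = [[streaks[y][x][t] for x in range(num_cols)]
                 for y in range(num_lines)]
        movie.append(frame)
    return movie


streaks = [
    [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]],  # scan line 0: 3 columns, 4 time bins
    [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]],  # scan line 1
]
movie = stitch_streaks(streaks)
print(len(movie), movie[1])  # prints: 4 [[1, 0, 0], [0, 1, 0]]
```

Because the step is only an index transposition, all of the difficulty lies in making the scan lines agree in time, which is why the synchronization described above matters.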

How will these complicated instruments transition out of the lab?

The ultrafast imaging devices of today are quite bulky. The laser sources and high-speed cameras fit on a small optical bench and need careful calibration for triggering. However, there is parallel research on femtosecond solid-state lasers, which will greatly simplify the illumination source. In addition, progress in optical communication and optical computing shows great promise for compact, fast optical sensors. Nevertheless, in the short run, we are building applications where portability is not as critical.

Related Work

P. Sen, B. Chen, G. Garg, S. Marschner, M. Horowitz, M. Levoy, and H. Lensch, “Dual Photography,” ACM SIGGRAPH 2005.

S. M. Seitz, Y. Matsushita, and K. N. Kutulakos, “A Theory of Inverse Light Transport,” ICCV 2005.

S. K. Nayar, G. Krishnan, M. Grossberg, and R. Raskar, “Fast Separation of Direct and Global Components of a Scene Using High Frequency Illumination,” SIGGRAPH 2006.

K. Kutulakos and E. Steger, “A Theory of Refractive and Specular 3D Shape by Light-Path Triangulation,” IJCV 2007.

B. Atcheson, I. Ihrke, W. Heidrich, A. Tevs, D. Bradley, M. Magnor, and H.-P. Seidel, “Time-resolved 3D Capture of Non-stationary Gas Flows,” SIGGRAPH Asia 2008.

Presentation, Videos and News Stories

News Coverage:

The New York Times: Speed of Light Lingers in Face of New Camera

MIT News: “Trillion-frame-per-second video”: By using optical equipment in a totally unexpected way, MIT researchers have created an imaging system that makes light look slow.

BBC: MIT’s Light Tracking Camera

Melanie Gonick, MIT News


Related project: CORNAR, Looking Around Corners

Acknowledgements

We thank the entire Camera Culture group for their unrelenting support.

This research is supported by research grants from MIT Media Lab sponsors, MIT Lincoln Labs and the Army Research Office through the Institute for Soldier Nanotechnologies at MIT. Ramesh Raskar is supported by a 2009 Alfred P. Sloan Research Fellowship and a 2010 DARPA Young Faculty Award.