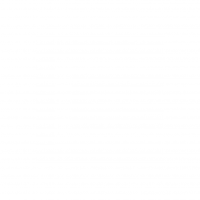

Using short laser pulses and a fast detector, we built a device that can look around corners with no imaging device in the line of sight, using scattered light and time-resolved imaging.

The seemingly impossible task of recording what is beyond the line of sight is possible due to ultra-fast imaging. A new form of photography, femto-photography, exploits the finite speed of light and analyzes ‘echoes of light’. (Illustration: Tiago Allen)

Video by Nature.com: Nature.com/news

Team

Ramesh Raskar, Associate Professor, MIT Media Lab; Project Director (raskar(at)mit.edu)

Moungi G. Bawendi, Professor, Dept of Chemistry, MIT

Andreas Velten, Postdoctoral Associate, MIT Media Lab; Lead Author (velten(at)mit.edu)

Christopher Barsi, Postdoctoral Associate, MIT Media Lab

Everett Lawson, MIT Media Lab

Nikhil Naik, Research Assistant, MIT Media Lab

Otkrist Gupta, Research Assistant, MIT Media Lab

Thomas Willwacher, Harvard University

Ashok Veeraraghavan, Rice University

Amy Fritz, MIT Media Lab

Chinmaya Joshi, MIT Media Lab and COE-Pune

Current Collaborators:

Diego Gutierrez, Universidad de Zaragoza

Di Wu, MIT Media Lab and Tsinghua U.

Matt O’Toole, MIT Media Lab and U. of Toronto

Belen Masia, MIT Media Lab and Universidad de Zaragoza

Kavita Bala, Cornell U.

Shuang Zhao, Cornell U.

Paper

A. Velten, T. Willwacher, O. Gupta, A. Veeraraghavan, M. G. Bawendi, and R. Raskar, “Recovering Three-Dimensional Shape around a Corner using Ultra-Fast Time-of-Flight Imaging.” Nature Communications, March 2012, http://dx.doi.org/10.1038/ncomms1747, [Local Copy]

Download high resolution photos, videos, related papers and presentations

Abstract

We have built a camera that can look around corners and beyond the line of sight. The camera uses light that travels from the object to the camera indirectly, by reflecting off walls or other obstacles, to reconstruct a 3D shape.

The device has been developed by the MIT Media Lab’s Camera Culture group in collaboration with the Bawendi Lab in the Department of Chemistry at MIT. An earlier prototype was built in collaboration with Prof. Joe Paradiso at the MIT Media Lab and Prof. Neil Gershenfeld at the Center for Bits and Atoms at MIT. A laser pulse that lasts less than one trillionth of a second is used as a flash, and the light returning from the scene is collected by a camera at the equivalent of close to 1 trillion frames per second. Because of this high speed, the camera is aware of the time it takes for the light to travel through the scene. This information is then used to reconstruct the shape of objects that are visible from the position of the wall, but not from the laser or camera.

Potential applications include search and rescue planning in hazardous conditions, collision avoidance for cars, and robots in industrial environments. Transient imaging also has significant potential benefits in medical imaging that could allow endoscopes to view around obstacles inside the human body.

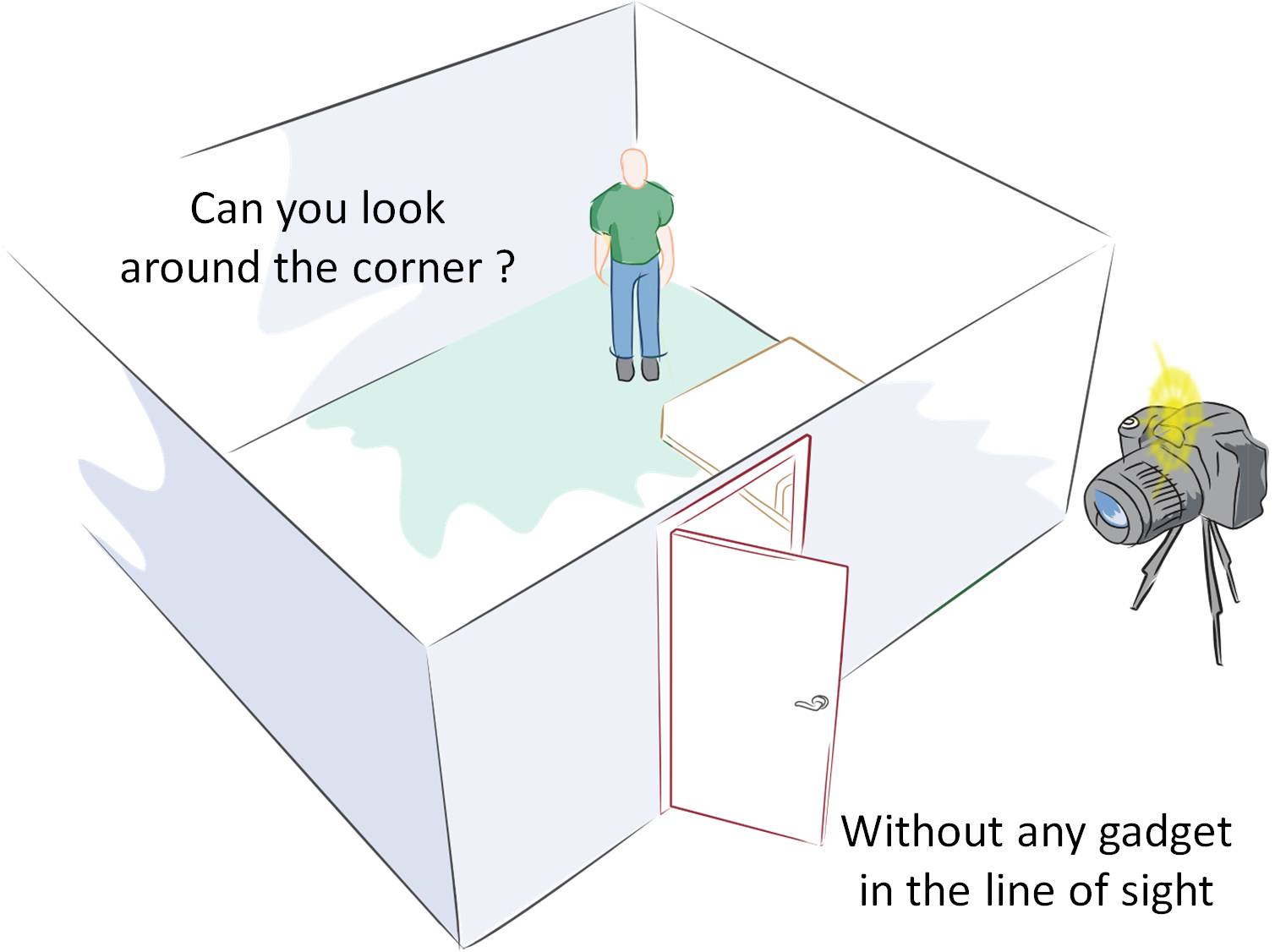

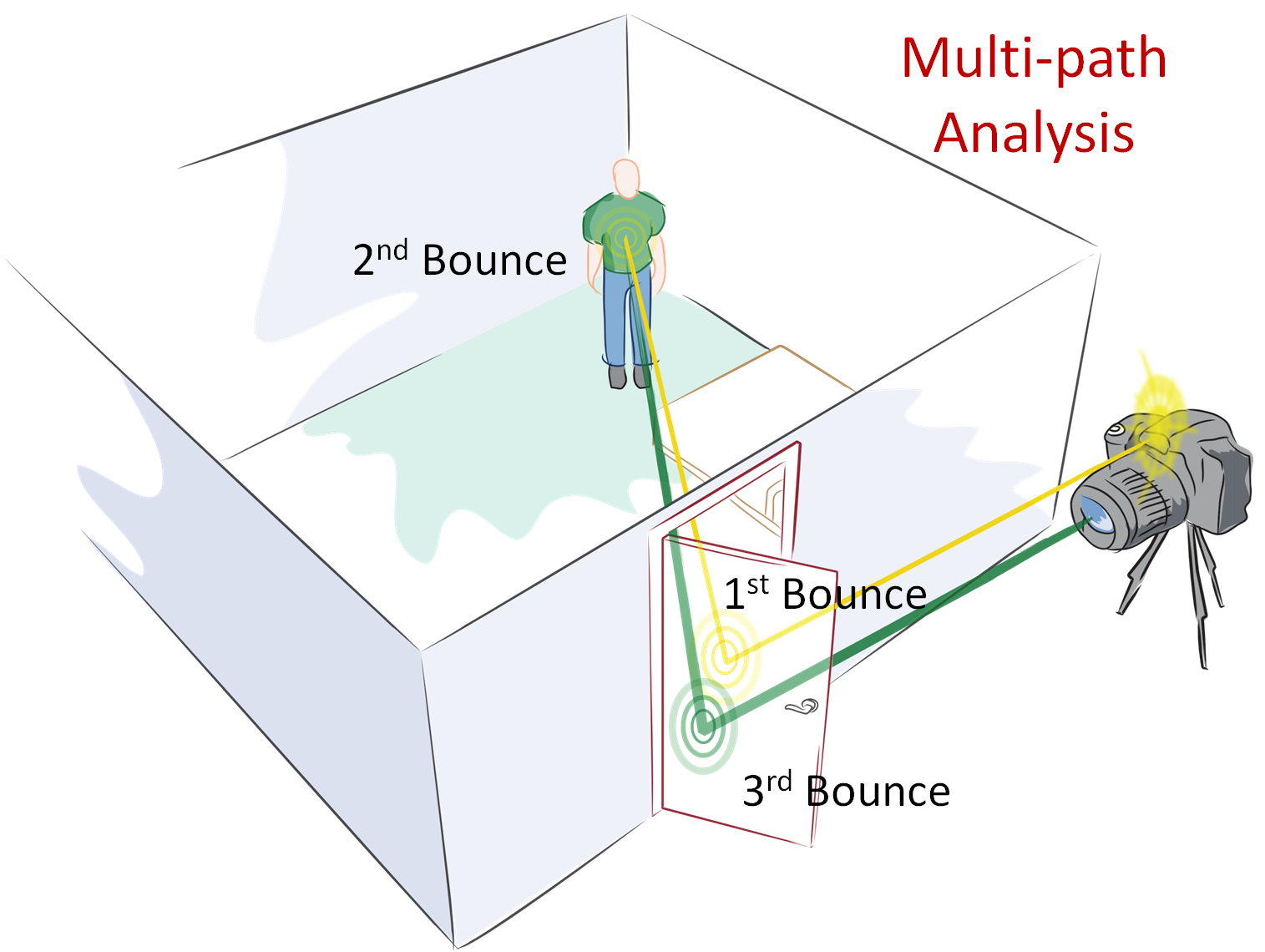

The new invention, which we call femto-photography, consists of femtosecond laser illumination, picosecond-accurate detectors and mathematical inversion techniques. By emitting short laser pulses and analyzing multi-bounce reflections, we can estimate hidden geometry. In transient light transport, we account for the fact that the speed of light is finite. Light travels ~1 foot/nanosecond, and by sampling the light at picosecond resolution we can estimate shapes with centimeter accuracy.
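The link between timing resolution and spatial resolution can be checked with a little arithmetic. The sketch below is illustrative only: the function names are hypothetical, the sampling intervals are example values rather than the specifications of the actual detector, and the achievable reconstruction accuracy also depends on pulse width, jitter and the inversion algorithm.

```python
# Speed of light in vacuum (exact SI value), in metres per second.
C = 299_792_458.0


def path_length_resolution_m(time_resolution_s: float) -> float:
    """Path-length difference that light covers in one timing interval."""
    return C * time_resolution_s


def feet_per_nanosecond() -> float:
    """Distance light travels in one nanosecond, expressed in feet."""
    return C * 1e-9 / 0.3048


if __name__ == "__main__":
    print(f"light travels {feet_per_nanosecond():.3f} ft per ns")
    # Example sampling intervals (assumed values, not measured ones).
    for picoseconds in (1, 10, 100):
        millimetres = path_length_resolution_m(picoseconds * 1e-12) * 1e3
        print(f"{picoseconds:>3} ps  ->  {millimetres:.2f} mm of path length")
```

At a timing interval of a few tens of picoseconds, the corresponding path-length difference is on the order of a centimeter, which is consistent with the accuracy quoted above.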

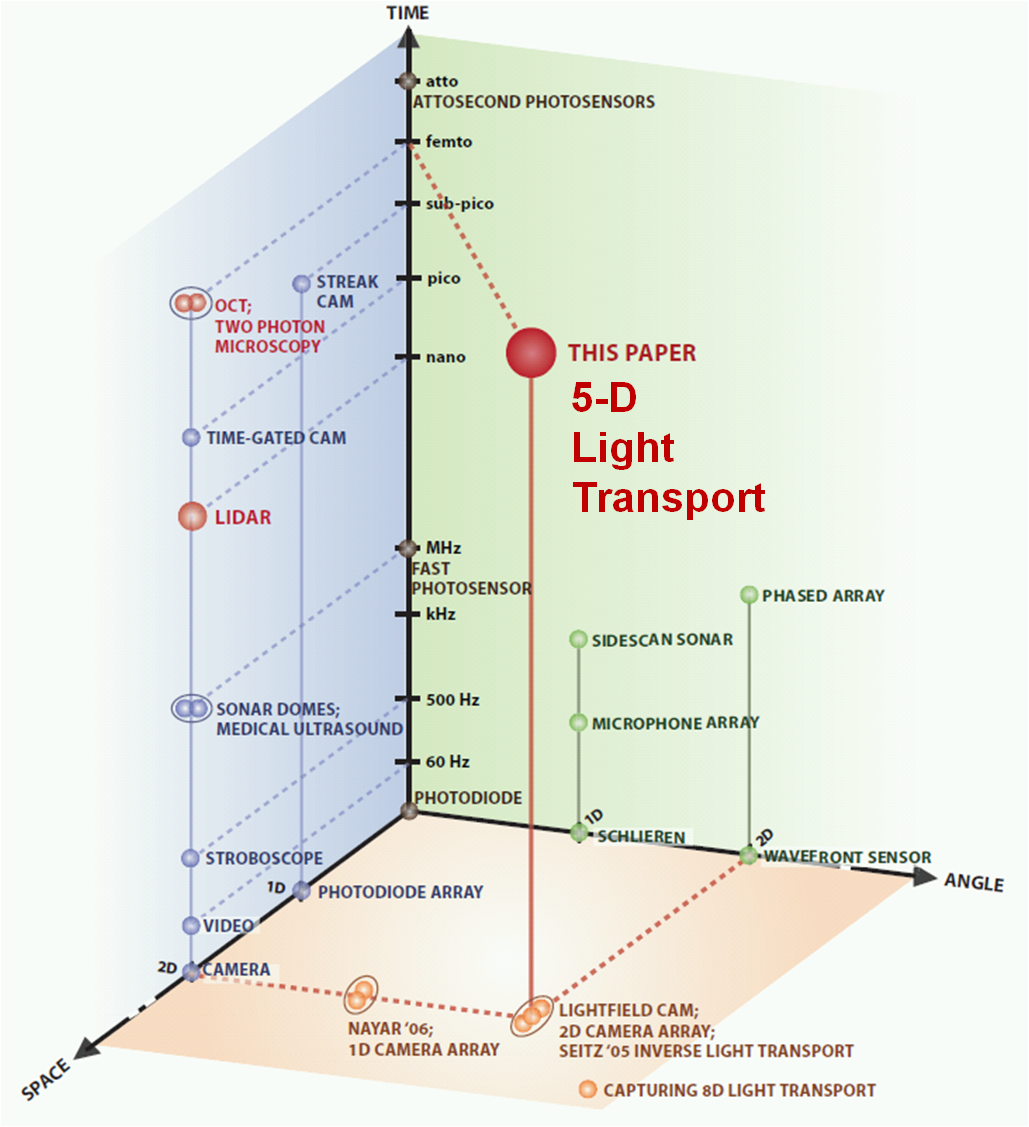

| Multi-path analysis: We show that by emitting short pulses and analyzing multi-bounce reflection from the door, we can infer hidden geometry even if the intermediate bounces are not visible. The transient imaging camera prototype consists of (a) Femtosecond laser illumination (b) Picosecond-accurate camera and (c) inversion algorithm. We measure the five dimensional Space Time Impulse Response (STIR) of the scene and reconstruct the hidden surface. | Higher Dimensional Light Transport: Popular imaging methods plotted in the Space-Angle-Time axes. With higher dimensional light capture, we expand the horizons of scene understanding. Our work uses LIDAR-like imaging hardware, but, in contrast, we exploit the multi-path information which is rejected in both LIDAR and OCT. |

Earlier Work: Time-Resolved Imaging

Overview:

“Looking Around Corners: New Opportunities in Femto-Photography”, Ramesh Raskar, ICCP Conference at CMU, Invited Talk [Video Link]

3D Shape Around a Corner:

A. Velten, T. Willwacher, O. Gupta, A. Veeraraghavan, M. G. Bawendi, and R. Raskar, “Recovering Three-Dimensional Shape around a Corner using Ultra-Fast Time-of-Flight Imaging.” Nature Communications, March 2012, http://dx.doi.org/10.1038/ncomms1747, [Local Copy]

Ultra-fast 2D Movies:

http://web.media.mit.edu/~raskar//trillionfps/

Reflectance (BRDF) from a single viewpoint:

“Single View Reflectance Capture using Multiplexed Scattering and Time-of-flight Imaging”, Nikhil Naik, Shuang Zhao, Andreas Velten, Ramesh Raskar, Kavita Bala, ACM SIGGRAPH Asia 2011 [Link]

Motion around a corner:

Rohit Pandharkar, Andreas Velten, Andrew Bardagjy, Everett Lawson, Moungi Bawendi, Ramesh Raskar: Estimating Motion and size of moving non-line-of-sight objects in cluttered environments. CVPR 2011: 265-272 [Link]

Barcode around a corner:

“Looking Around the Corner using Transient Imaging”, Ahmed Kirmani, Tyler Hutchison, James Davis, Ramesh Raskar. [in ICCV 2009 Kyoto, Japan, Oral], Marr Prize Honorable Mention. [Local Copy]

Indirect depth:

Matt Hirsch and Ramesh Raskar, “Shape of Challenging Scenes using high speed ToF cameras”, May 2008

Early white paper:

R Raskar and J Davis, “5d time-light transport matrix: What can we reason about scene properties”, Mar 2008

| Video by Nature.com | Video by Paula Aguilera, LabCast MIT Media Lab |

Trillion Frames Per Second Imaging Overview of Project |

Application Scenarios

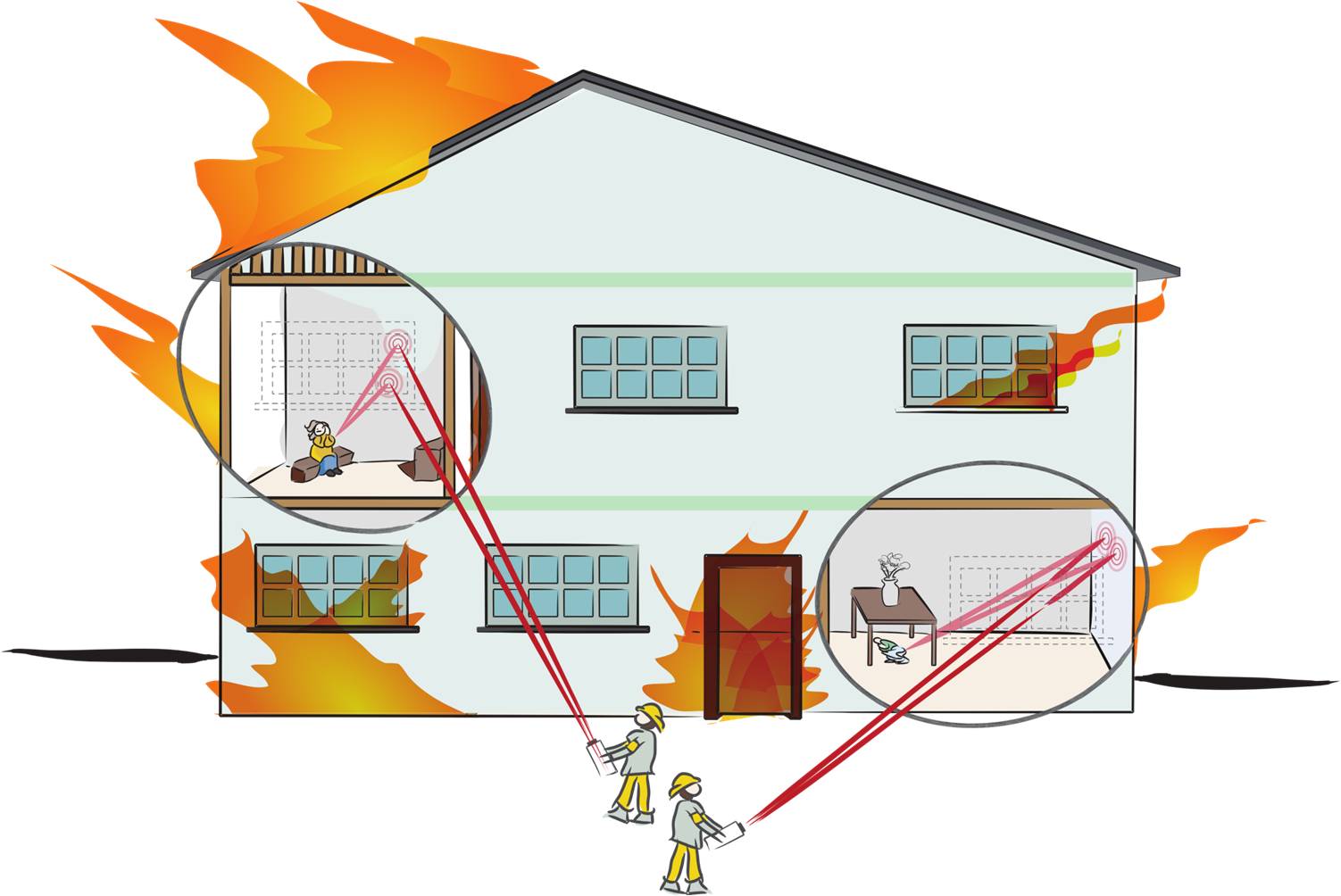

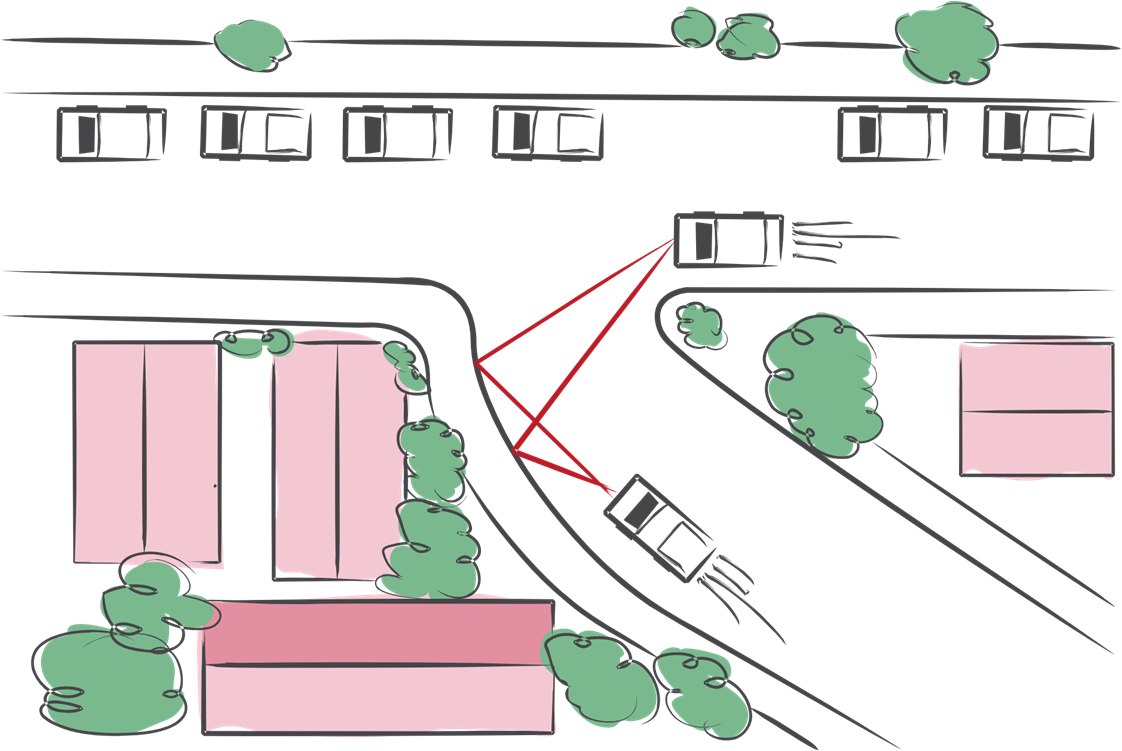

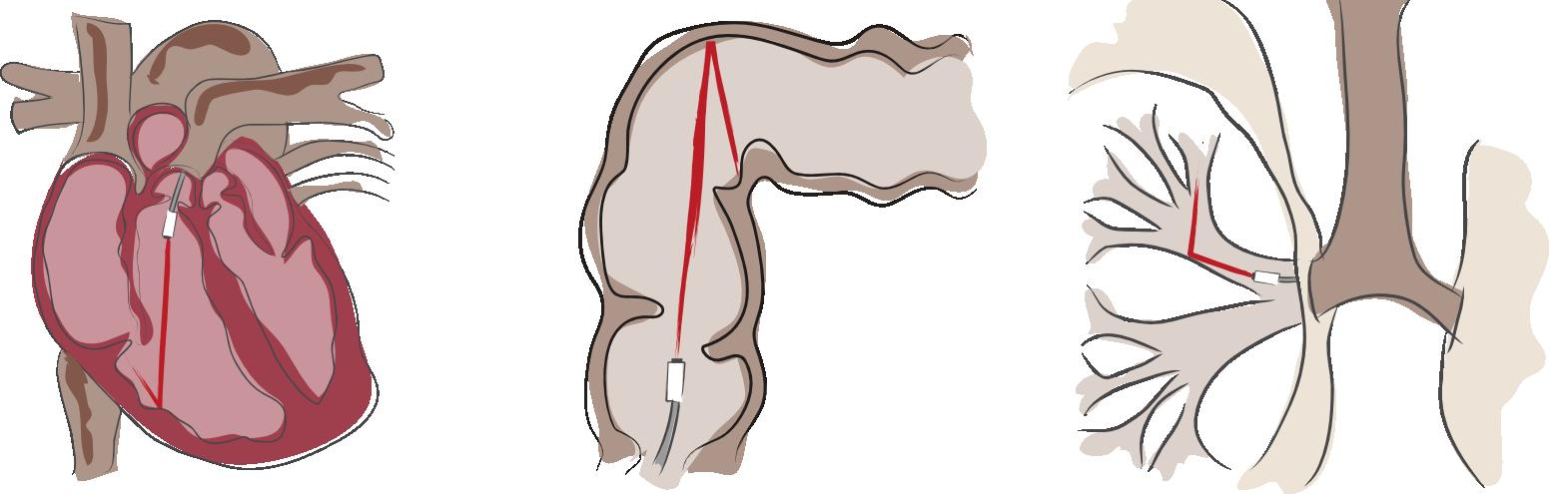

Femto-photography opens up a whole new range of applications in areas such as rescue planning in hazardous conditions (for example, fire), medical imaging, car navigation and robotics. Here are sketches of potential application scenarios.

Locating survivors in rescue planning.

Avoiding collisions at blind corners for cars.

Various endoscopy procedures where it is challenging for the camera at the tip to reach tight spaces (cardioscopy, colonoscopy and bronchoscopy).

(Illustrations: Tiago Allen)

Frequently Asked Questions

How can Femto-photography see what is beyond the line of sight?

Femto-photography exploits the finite speed of light and works like an ultra-fast time-of-flight camera. In traditional photography, the speed of light is treated as infinite and does not play a role. In our transient light transport framework, the finite amount of time light takes to travel from one surface to another provides useful information. The key contribution is a computational tool of transient reasoning for the inversion of light transport.

The basic concept of a transient imaging camera can be understood using a simple example of a room with an open door. The goal here is to compute the geometry of the object inside the room by exploiting light reflected off the door. The user directs an ultra-short laser pulse onto the door, and after the first bounce the light scatters into the room. The light reflects from objects inside the room and again from the door back toward the transient imaging camera. An ultra-fast array of detectors measures the time profile of the returned signal from multiple positions on the door. We analyze this multi-path light transport and infer shapes of objects that are in direct sight as well as beyond the line of sight. The analysis of the onsets in the time profile indicates the shape; we call this the inverse geometry problem.
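The door example can be made concrete with a small geometric sketch. It is not the reconstruction algorithm from the paper; it only shows, under assumed coordinates and with hypothetical function names, how the arrival time of a three-bounce path constrains where a hidden point can be. A single measurement leaves the point anywhere on an ellipsoid whose foci are the two door positions, which is why time profiles from multiple positions on the door are needed.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def three_bounce_time_s(laser, door_in, hidden, door_out, camera):
    """Travel time for the path laser -> door_in -> hidden -> door_out -> camera."""
    points = [laser, door_in, hidden, door_out, camera]
    length_m = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    return length_m / C


def consistent_with_onset(candidate, door_in, door_out, hidden_leg_m, tol=1e-9):
    """True if a candidate point explains the measured hidden-leg length.

    hidden_leg_m is the path length door_in -> point -> door_out, i.e. the
    total path minus the known laser-to-door and door-to-camera segments.
    All points passing this test lie on one ellipsoid.
    """
    leg = math.dist(door_in, candidate) + math.dist(candidate, door_out)
    return abs(leg - hidden_leg_m) <= tol


if __name__ == "__main__":
    # Hypothetical layout, coordinates in metres.
    laser, camera = (0.0, 2.0, 0.0), (6.0, 2.0, 0.0)
    door_in, door_out = (0.0, 0.0, 0.0), (6.0, 0.0, 0.0)
    hidden = (3.0, 0.0, 4.0)

    t = three_bounce_time_s(laser, door_in, hidden, door_out, camera)
    hidden_leg_m = t * C - math.dist(laser, door_in) - math.dist(door_out, camera)
    print(f"arrival time: {t * 1e9:.2f} ns, hidden leg: {hidden_leg_m:.2f} m")

    # The true point fits, but so does a different point on the same ellipsoid.
    print(consistent_with_onset(hidden, door_in, door_out, hidden_leg_m))
    print(consistent_with_onset((3.0, 4.0, 0.0), door_in, door_out, hidden_leg_m))
    print(consistent_with_onset((3.0, 0.0, 1.0), door_in, door_out, hidden_leg_m))
```

Intersecting the ellipsoids obtained from many pairs of door positions narrows the candidates down to the hidden surface; how that intersection is computed robustly is the subject of the paper and is not shown here.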

How is this related to computer vision and general techniques in scene understanding?

Our goal is to exploit the finite speed of light to improve image capture and scene understanding. New theoretical analysis coupled with emerging ultra-high-speed imaging techniques can lead to a new source of computational visual perception. We are developing the theoretical foundation for sensing and reasoning using transient light transport, and experimenting with scenarios in which transient reasoning exposes scene properties that are beyond the reach of traditional computer vision.

What is a transient imaging camera?

We measure how the room responds to a very short laser pulse. Transient imaging therefore uses a transient response rather than a steady-state response. Two common examples of transient responses are the impulse response and the step response. In a room-sized environment, the rate of arrival of photons after such an impulse provides a transient response. A traditional camera, on the other hand, records a steady-state response.
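A toy example may help to show what the steady-state measurement discards. The photon counts below are invented for illustration and the function names are hypothetical; the point is only that two different time profiles can sum to the same conventional pixel value while their onsets differ.

```python
def steady_state(transient):
    """Value a conventional pixel records: photons summed over all time bins."""
    return sum(transient)


def onset_bin(transient, threshold=0):
    """Index of the first time bin whose count exceeds the threshold, or None."""
    for index, count in enumerate(transient):
        if count > threshold:
            return index
    return None


if __name__ == "__main__":
    # Made-up photon counts per time bin for two scenes.
    short_path = [0, 5, 3, 1, 0, 0, 0, 0]
    long_path = [0, 0, 0, 0, 5, 3, 1, 0]

    for name, profile in (("short path", short_path), ("long path", long_path)):
        print(name, "steady state:", steady_state(profile),
              "onset bin:", onset_bin(profile))
```

Both profiles give the same steady-state value, so a traditional camera cannot tell them apart, whereas the onset bin differs and carries the path-length information.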

What is new about the Femto-photography approach?

Modern imaging technology captures and analyzes real-world scenes using 2D camera images. These images correspond to steady-state light transport, which means that traditional computer vision ignores the light multipath due to time delay in the propagation of light through the scene. Each ray of light takes a distinct path through the scene, and these paths carry a plethora of information that is lost when all the light rays are summed up at the traditional camera pixel. Light travels very fast (~1 foot in 1 nanosecond), and sampling light at these time scales is well beyond the reach of conventional sensors (the fastest video cameras have microsecond exposures). On the other hand, femtosecond imaging techniques such as optical coherence tomography, which do employ ultra-fast sensing and laser illumination, cannot be used beyond millimeter-sized biological samples. Moreover, all imaging systems to date are line of sight. We propose to combine the recent advances in ultra-fast light sensing and illumination with a novel theoretical framework that exploits the information contained in light multipath to solve seemingly impossible problems in real-world scenes, such as looking around corners and material sensing.

How can one take a photo of photons in motion at a trillion frames per second?

We use a picosecond-accurate detector (single pixel). Another option is a special camera called a streak camera, which behaves like an oscilloscope with a corresponding trigger and deflection of beams. A light pulse enters the instrument through a narrow slit along one direction. It is then deflected in the perpendicular direction so that photons that arrive first hit the detector at a different position compared to photons that arrive later. The resulting image forms a “streak” of light. Streak tubes are often used in chemistry or biology to observe millimeter-sized objects but rarely for free-space imaging. See recent movies of photons in motion captured by our group at [Video]
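The mapping a streak camera performs, from arrival time to position on the detector, can be mimicked with a simple two-dimensional histogram. This is a toy model with hypothetical names and parameters; it ignores the physics of the real instrument and only shows how a one-dimensional slit plus time deflection yields a space-time image.

```python
def streak_image(photons, n_slit_bins, n_time_bins, time_window_s):
    """Bin photons into a streak image.

    photons: iterable of (slit_position, arrival_time_s), with slit_position
    normalised to [0, 1) along the slit. Rows of the result are time bins
    (the deflection direction); columns are positions along the slit.
    """
    image = [[0] * n_slit_bins for _ in range(n_time_bins)]
    for position, arrival_s in photons:
        # Ignore photons outside the slit or outside the sweep window.
        if not (0.0 <= position < 1.0 and 0.0 <= arrival_s < time_window_s):
            continue
        column = min(int(position * n_slit_bins), n_slit_bins - 1)
        row = min(int(arrival_s / time_window_s * n_time_bins), n_time_bins - 1)
        image[row][column] += 1
    return image


if __name__ == "__main__":
    # Three made-up photons: two early ones on the left, one late on the right.
    photons = [(0.1, 0.3e-9), (0.1, 0.4e-9), (0.9, 1.7e-9)]
    for row in streak_image(photons, n_slit_bins=2, n_time_bins=4,
                            time_window_s=2e-9):
        print(row)
```

Early photons land in the top rows and late photons in the bottom rows, so each column of the image is the time profile seen at one position along the slit.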

What are the challenges?

The number of possible light multipaths grows exponentially in the number of scene points. There exists no prior theory that models time-delayed light propagation, which makes the modeling aspect a very hard theoretical and computationally intense problem. Moreover, we intend to develop a practical imaging device using this theory and need to factor in real-world limitations such as sensor bandwidth, SNR, new noise models, etc. Building a safe, portable device that functions in free space using highly experimental optics such as femtosecond lasers and sensitive picosecond cameras is extremely challenging and would require pushing modern photonics and optics technology to its limits, creating new hardware challenges and opportunities. The current resolution of the reconstructed data is low, but it is sufficient to recognize shapes. With higher time and space resolution, the quality will improve significantly.

How can endoscopes see beyond the line of sight?

Consider the constraints on diagnostic endoscopy. Great progress in imaging hardware has allowed a gradual shift from rigid to flexible to digital endoscopes. Digital scopes put image sensors directly at the tip of the scopes. However, there is a natural limit to their reach due to constraints in the dimensions of the human body that leave very little room for guiding the imager assemblies. Making imagers smaller is challenging due to the diffraction limits posed on the optics as well as due to sensor-noise limits on the sensor pixel size. In many scenarios, we want to avoid the maze of cavities and serial traversal for examination. We want the precise location and size of a lesion when deciding for or against application of limited or extended surgical procedures. Ideally, we should be able to explore a multitude of paths in a simultaneous and parallel fashion. We use transient imaging to mathematically invert the data available in light that has undergone complex optical reflections. We can convert elegant optical and mathematical insights into unique medical tools.

How will these complicated instruments transition out of the lab?

The ultrafast imaging devices today are quite bulky. The laser sources and high-speed cameras fit on a small optical bench and need to be carefully calibrated for triggering. However, there is parallel research in femtosecond solid-state lasers, which will greatly simplify the illumination source. Picosecond-accurate single-pixel detectors are now available for under $100. Building an array of such pixels is non-trivial but comparable to building thermal-IR cameras. Nevertheless, in the short run, we are building applications where portability is not as critical. For endoscopes, the imaging and illumination can be achieved via coupled fibers.

Related Work

P Sen, B Chen, G Garg, S Marschner, M Horowitz, M Levoy, and H Lensch, “Dual photography”, in ACM SIG. ’05

S M Seitz, Y Matsushita, and K N Kutulakos, “A theory of inverse light transport”, in ICCV ’05

S K Nayar, G Krishnan, M Grossberg, and R Raskar, “Fast separation of direct and global components of a scene using high frequency illumination”, in SIGGRAPH ’06

K Kutulakos and E Steger, “A theory of refractive and specular 3d shape by light-path triangulation”, IJCV ’07.

B. Atcheson, I. Ihrke, W. Heidrich, A. Tevs, D. Bradley, M. Magnor, H.-P. Seidel, “Time-resolved 3D Capture of Non-stationary Gas Flows” Siggraph Asia, 2008

Presentation, Videos and

Media Lab 25th Year Anniversary Celebrations, October 2010 [PPT] [Video]

ICCP Conference at CMU, Invited Talk [Video Link]

Trillion Frames Per Second Camera to Visualize Photons in Motion [Video Link]

More recent work on Visualizing Light in Motion at Trillion Frames Per Second

News Stories

Nature.com News: http://www.nature.com/news/how-to-see-around-corners-1.10258

MIT News:

Acknowledgements

We thank James Davis, UC Santa Cruz, who, along with Ramesh Raskar, did the foundational work in analyzing time-images in 2007-2008. We are very thankful to Neil Gershenfeld, Joseph Paradiso, Franco Wong, Manu Prakash, George Verghese, Franz Kaertner and Rajeev Ram for providing facilities and guidance. Several MIT undergraduate students (George Hansel, Kesavan Yogeswaran and Biyeun Buczyk) assisted in carrying out initial experiments. We thank the entire Camera Culture group for their unrelenting support. We thank Gavin Miller, Adam Smith and James Skorupski for several initial discussions.

This research is supported by research grants from MIT Media Lab sponsors, MIT Lincoln Labs and the Army Research Office through the Institute for Soldier Nanotechnologies at MIT. Ramesh Raskar is supported by an Alfred P. Sloan Research Fellowship 2009 and DARPA Young Faculty award 2010.

Recent projects in Camera Culture group

| Computational Photography |

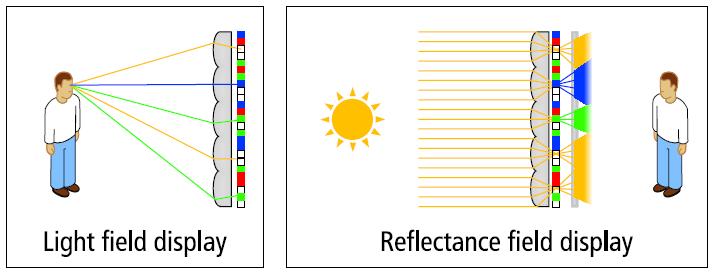

6D Display Lighting and Viewpt aware displays |

Bokode Long Distance Barcodes |

BiDi Screen Touch+3D Hover on Thin LCD |

NETRA Cellphone based Eye Test |

GlassesFree 3DHDTV |