| Kshitij Marwah | Gordon Wetzstein | Yosuke Bando | Ramesh Raskar |

| MIT Media Lab – Camera Culture Group |

SIGGRAPH 2013. ACM Transactions on Graphics 32(4).

Abstract

Light field photography has attracted significant research interest in the last two decades; today, commercial light field cameras are widely available. Nevertheless, most existing acquisition approaches either multiplex a low-resolution light field into a single 2D sensor image or require multiple photographs to be taken to acquire a high-resolution light field. We propose a compressive light field camera architecture that allows higher-resolution light fields to be recovered from a single image than was previously possible. The proposed architecture comprises three key components: light field atoms as a sparse representation of natural light fields, an optical design that captures optimized 2D light field projections, and robust sparse reconstruction methods that recover a 4D light field from a single coded 2D projection. In addition, we demonstrate a variety of other applications for light field atoms and sparse coding techniques, including 4D light field compression and denoising.
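The coded 2D projection mentioned in the abstract can be modeled as a per-ray modulation followed by integration over the aperture, i(x, y) = Σ_{u,v} φ(x, y, u, v) l(x, y, u, v). The NumPy sketch below is not the released source code; it assumes a discretized light field stored as an array indexed (x, y, u, v) and a known per-ray transmission φ of the same shape, and the function names are hypothetical. It also writes the same projection as a matrix Φ acting on the vectorized light field, which is the form sparse reconstruction methods operate on.

```python
import numpy as np


def coded_projection(light_field: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Simulate one coded 2D sensor image from a 4D light field.

    Both arrays have shape (X, Y, U, V): spatial (x, y) and angular (u, v) samples.
    Each sensor pixel sums its modulated rays over the aperture:
        i(x, y) = sum_{u, v} mask(x, y, u, v) * light_field(x, y, u, v)
    """
    if light_field.shape != mask.shape:
        raise ValueError("light field and mask must have the same 4D shape")
    return np.einsum("xyuv,xyuv->xy", mask, light_field)


def measurement_matrix(mask: np.ndarray) -> np.ndarray:
    """Same projection as a matrix Phi, so that Phi @ light_field.ravel() == image.ravel().

    Assumes NumPy's default row-major (C-order) flattening of the (X, Y, U, V) array,
    so the U * V angular samples of pixel p occupy one contiguous block of columns.
    """
    X, Y, U, V = mask.shape
    n_pixels, n_views = X * Y, U * V
    per_pixel = mask.reshape(n_pixels, n_views)
    phi = np.zeros((n_pixels, mask.size))
    for p in range(n_pixels):
        phi[p, p * n_views:(p + 1) * n_views] = per_pixel[p]
    return phi


# Usage with a random light field and a random (hypothetical) mask in [0, 1).
rng = np.random.default_rng(0)
light_field = rng.random((4, 5, 3, 3))
mask = rng.random(light_field.shape)
image = coded_projection(light_field, mask)
print(image.shape)  # (4, 5)
```

Setting φ to all ones reduces the model to a conventional photograph that simply sums the rays over the aperture; the coding comes entirely from φ.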

Files

- Paper [pdf]

- Video [mov]

- Supplemental material [pdf]

- Presentation slides [pptx]

- Source Code and Datasets [zip]

Citation

K. Marwah, G. Wetzstein, Y. Bando, R. Raskar. Compressive Light Field Photography using Overcomplete Dictionaries and Optimized Projections. Proc. of SIGGRAPH 2013 (ACM Transactions on Graphics 32, 4), 2013.

BibTeX

@article{Marwah:2013:CompressiveLightFieldPhotography,
  author = {K. Marwah and G. Wetzstein and Y. Bando and R. Raskar},
  title = {{Compressive Light Field Photography using Overcomplete Dictionaries and Optimized Projections}},
  journal = {ACM Trans. Graph. (Proc. SIGGRAPH)},
  volume = {32},
  number = {4},
  year = {2013},
  publisher = {ACM},
  pages = {1--11},
  address = {New York, NY, USA}
}

In the News

“FOCII: Light Field Cameras for Everybody” (more info on Kshitij’s homepage)

Convert DSLR Into a Light Field Camera from Kshitij Marwah on Vimeo.

Additional Information


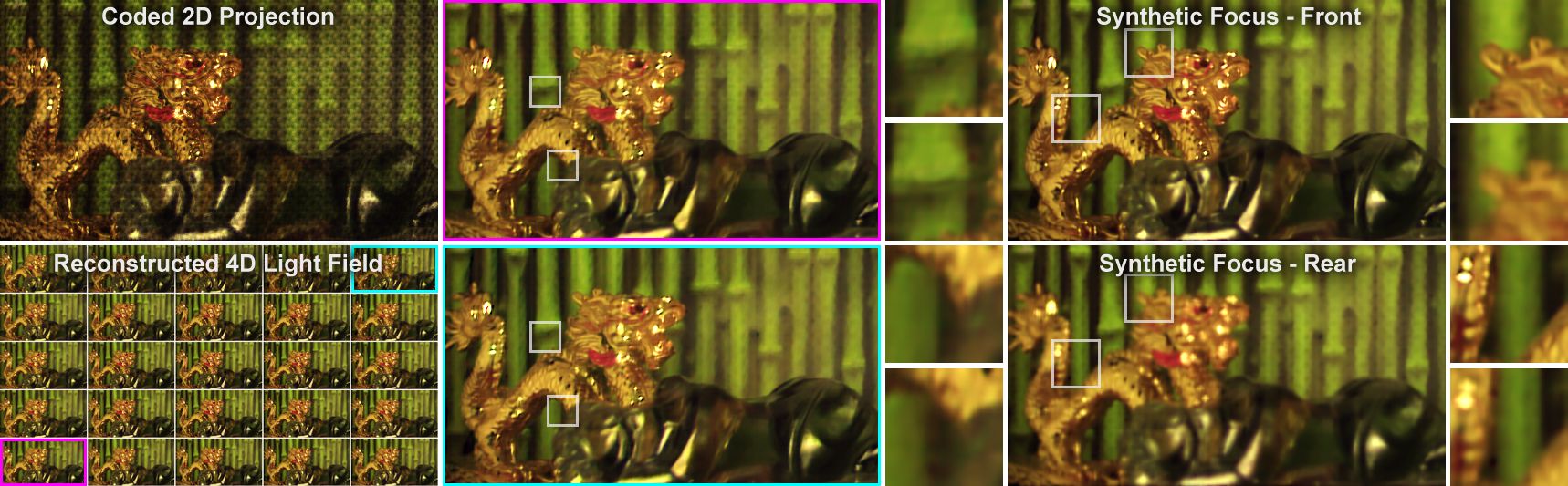

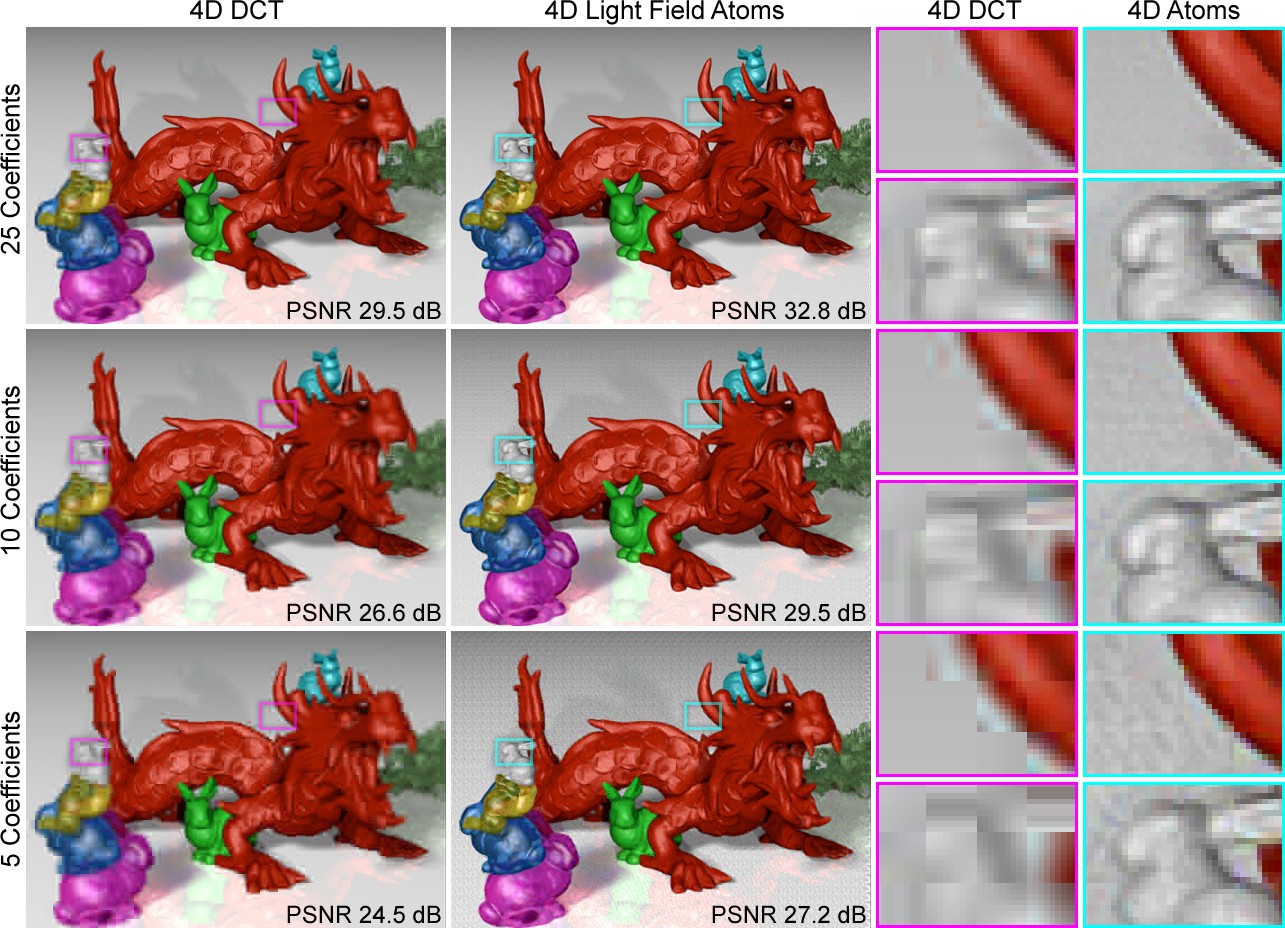

Figure 2: Compressibility of a 4D light field in various high-dimensional bases. As compared to popular basis representations, the proposed light field atoms provide better compression quality for natural light fields (bottom left). Edges and junctions are faithfully captured (right); for the purpose of 4D light field reconstruction from a single coded 2D projection, the proposed dictionaries combined with sparse coding techniques perform best in this experiment (right). Image credit: MIT Media Lab, Camera Culture Group


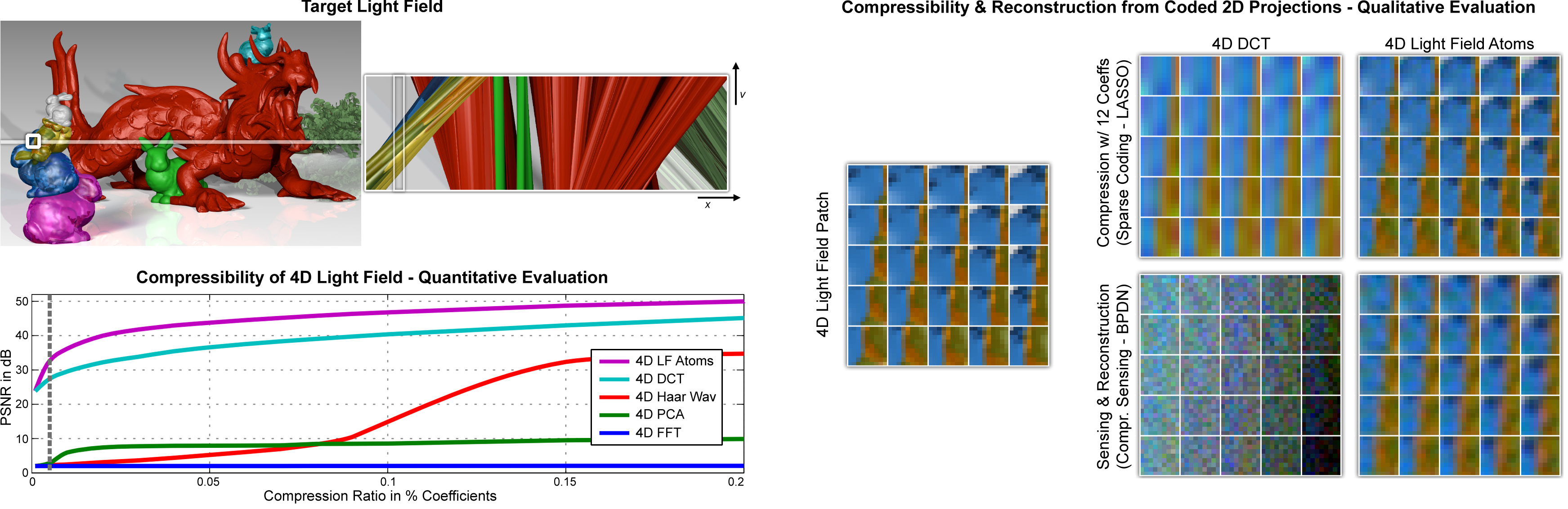

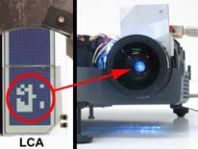

Figure 3: Prototype light field camera. We implement an optical relay system that emulates a spatial light modulator (SLM) mounted at a slight offset in front of the sensor (right inset). We employ a reflective liquid crystal on silicon (LCoS) display as the SLM (lower left insets). Image credit: MIT Media Lab, Camera Culture Group
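Why the slight offset matters can be seen in a flatland (1D) version of the geometry. A ray travelling from aperture position u to sensor pixel x crosses a mask plane at distance d in front of the sensor at s = x + (d/D)(u - x), where D is the aperture-to-sensor distance, so rays arriving at the same pixel from different directions see different mask values. This sketch assumes ideal geometric optics (no diffraction), a mask sampled at the pixel pitch with nearest-neighbor lookup, and rays that miss the mask treated as blocked; the function and parameter names are hypothetical, and the relay optics of the prototype are not modeled.

```python
import numpy as np


def mask_modulation(mask_pattern: np.ndarray, aperture_positions: np.ndarray,
                    mask_distance: float, aperture_distance: float) -> np.ndarray:
    """Flatland modulation phi[x, k] seen by the ray from aperture sample k to pixel x.

    Pixel centers are at x = 0, 1, ..., N - 1 (pixel pitch = 1) and the mask cells
    share that pitch. A ray from aperture position u to pixel x crosses the mask plane,
    a distance d in front of the sensor, at
        s = x + (d / D) * (u - x),
    where D is the aperture-to-sensor distance. Rays that miss the mask are treated as blocked.
    """
    n = len(mask_pattern)
    ratio = mask_distance / aperture_distance
    x = np.arange(n, dtype=float)[:, None]                    # sensor pixels, shape (N, 1)
    u = np.asarray(aperture_positions, dtype=float)[None, :]  # aperture samples, shape (1, K)
    s = x + ratio * (u - x)                                   # mask-plane hits, shape (N, K)
    idx = np.rint(s).astype(int)                              # nearest mask cell
    inside = (idx >= 0) & (idx < n)
    phi = np.zeros(s.shape)
    phi[inside] = mask_pattern[idx[inside]]
    return phi


# Hypothetical alternating 0/1 mask, three aperture samples, mask at d = D / 10.
pattern = (np.arange(16) % 2 == 0).astype(float)
phi = mask_modulation(pattern, np.array([-10.0, 0.0, 10.0]),
                      mask_distance=1.0, aperture_distance=10.0)
```

With d = 0 the mask sits directly on the sensor and every ray reaching a pixel sees the same value, so no angular information is coded; a nonzero d shears the pattern across the views.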


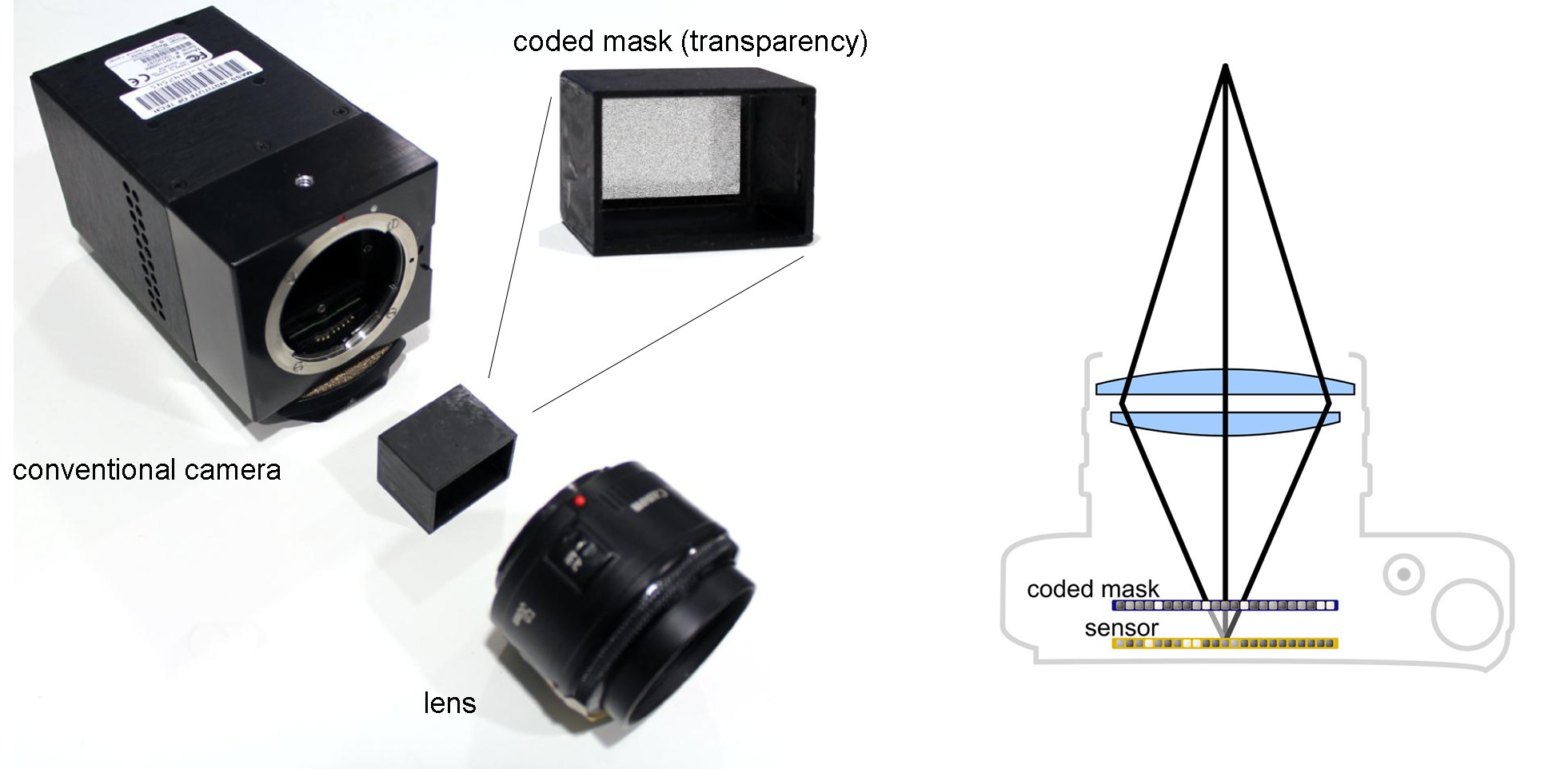

Figure 4: Second prototype light field camera. A conventional camera can also be retrofitted with a simple transparency mask to facilitate compressive light field photography (left). As shown on the right, the mask blocks some of the light rays inside the camera and therefore codes the captured image. This optical design combined with compressive computational processing could be easily integrated into consumer products. Image credit: MIT Media Lab, Camera Culture Group


Figure 5: Visualization of light field atoms captured in an overcomplete dictionary. Light field atoms are the essential building blocks of natural light fields: most light fields can be represented by a weighted sum of very few atoms. We show that light field atoms are crucial for robust light field reconstruction from coded projections and useful for many other applications, such as 4D light field compression and denoising. Image credit: MIT Media Lab, Camera Culture Group
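The statement that most light fields are a weighted sum of very few atoms is the sparsity prior behind reconstruction: with a dictionary D whose columns are atoms and a measurement matrix Φ, one seeks a sparse coefficient vector α with i ≈ ΦDα and then returns the light field patch l = Dα. The sketch below uses Orthogonal Matching Pursuit (OMP), a standard greedy sparse solver swapped in for illustration; it is not necessarily the solver used in the paper, and D and Φ are assumed to be given (for example, a learned dictionary and the projection matrix of one patch).

```python
import numpy as np


def omp(A: np.ndarray, y: np.ndarray, n_nonzero: int, tol: float = 1e-9) -> np.ndarray:
    """Orthogonal Matching Pursuit: greedily find alpha with at most n_nonzero entries and y ≈ A @ alpha."""
    y = np.asarray(y, dtype=float)
    col_norms = np.linalg.norm(A, axis=0)
    col_norms[col_norms == 0] = 1.0           # avoid dividing by zero for empty columns
    support = []
    coef = np.zeros(0)
    residual = y.copy()
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) <= tol:
            break
        # Select the column (atom) most correlated with the current residual.
        scores = np.abs(A.T @ residual) / col_norms
        if support:
            scores[support] = -np.inf         # never pick the same atom twice
        support.append(int(np.argmax(scores)))
        # Re-fit all selected coefficients jointly by least squares.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    alpha = np.zeros(A.shape[1])
    if support:
        alpha[support] = coef
    return alpha


def reconstruct_patch(measurements: np.ndarray, phi: np.ndarray,
                      dictionary: np.ndarray, n_nonzero: int) -> np.ndarray:
    """Recover a vectorized light field patch l = D @ alpha from coded measurements i = Phi @ l."""
    alpha = omp(phi @ dictionary, measurements, n_nonzero)
    return dictionary @ alpha
```

The solver only ever sees the product ΦD, which is why the choice of mask (Φ) and of dictionary (D) jointly determine how well a patch can be recovered.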


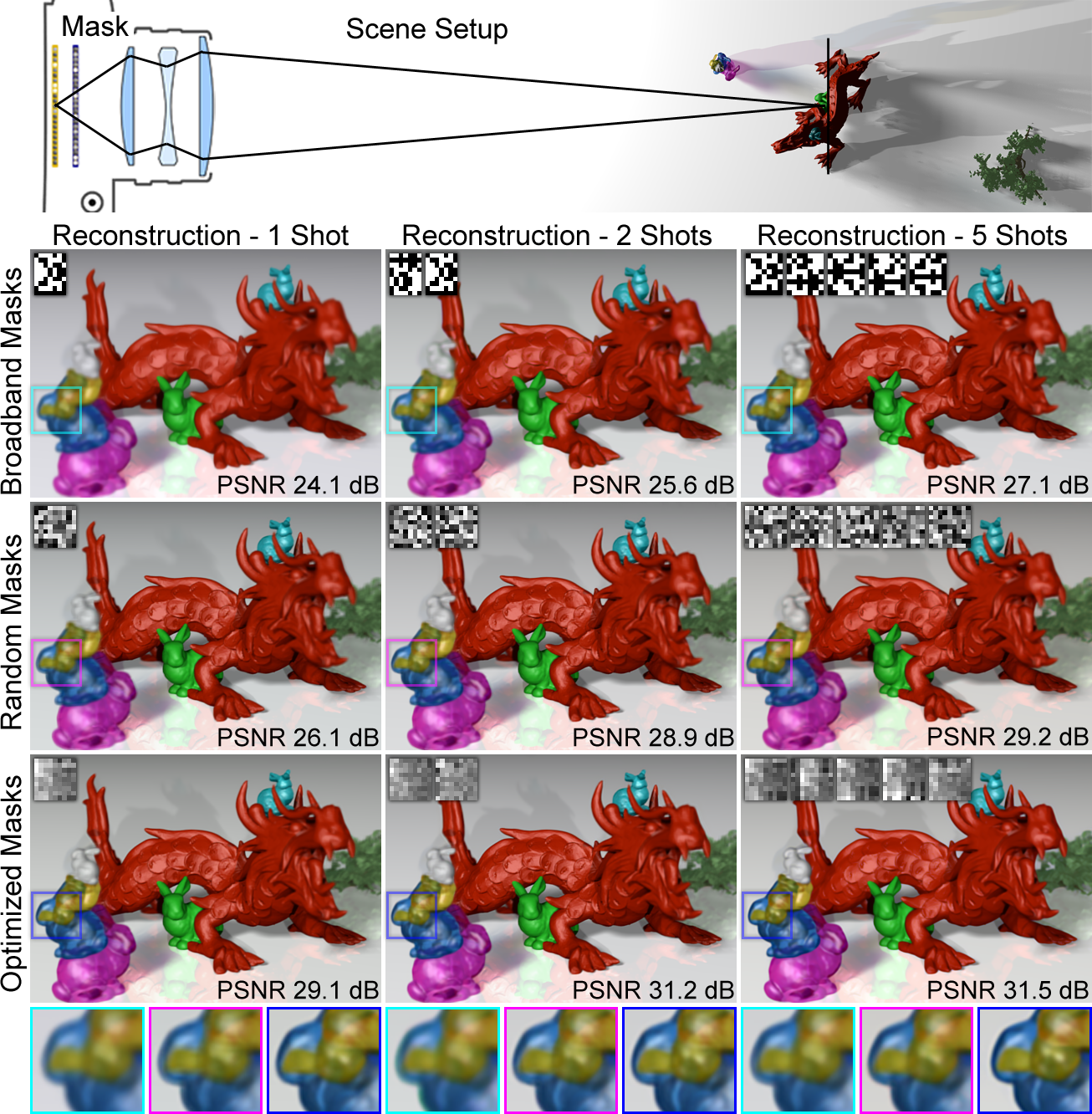

Figure 6: Evaluating optical modulation codes and multiple shot acquisition. We simulate light field reconstructions from coded projections for one, two, and five captured camera images. One tile of the corresponding mask patterns is shown in the insets. For all optical codes, increasing the number of shots increases the number of measurements and hence the reconstruction quality. Nevertheless, optimized mask patterns facilitate single-shot reconstructions with a quality that other patterns achieve only with multiple shots. Image credit: MIT Media Lab, Camera Culture Group
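The effect of extra shots can be counted directly. For one light field patch of p_x × p_y pixels and n_u × n_v views, each shot contributes p_x·p_y measurements against p_x·p_y·n_u·n_v unknowns. The sketch below stacks the per-shot projection matrices for hypothetical random masks and reports the rank of the stacked system; the patch size and masks are illustrative assumptions, not the ones used in Figure 6.

```python
import numpy as np


def shot_matrix(mask: np.ndarray) -> np.ndarray:
    """Projection matrix of one shot for a patch of shape (X, Y, U, V), row-major vectorization."""
    X, Y, U, V = mask.shape
    n_pixels, n_views = X * Y, U * V
    per_pixel = mask.reshape(n_pixels, n_views)
    phi = np.zeros((n_pixels, mask.size))
    for p in range(n_pixels):
        phi[p, p * n_views:(p + 1) * n_views] = per_pixel[p]
    return phi


def stacked_system(masks) -> np.ndarray:
    """Stack the per-shot matrices: every additional shot adds one row per pixel."""
    return np.vstack([shot_matrix(m) for m in masks])


rng = np.random.default_rng(0)
patch_shape = (3, 3, 5, 5)                 # hypothetical patch: 3x3 pixels, 5x5 views
n_unknowns = int(np.prod(patch_shape))
ranks = {}
for n_shots in (1, 2, 5):
    masks = [rng.random(patch_shape) for _ in range(n_shots)]
    ranks[n_shots] = int(np.linalg.matrix_rank(stacked_system(masks)))
    print(n_shots, ranks[n_shots], n_unknowns)
```

For this 3 × 3 × 5 × 5 patch (225 unknowns), generic masks give 9, 18, and 45 independent measurements for one, two, and five shots, i.e. at most 20% of the unknowns even with five shots, which is why the sparse prior and the choice of mask pattern still matter.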


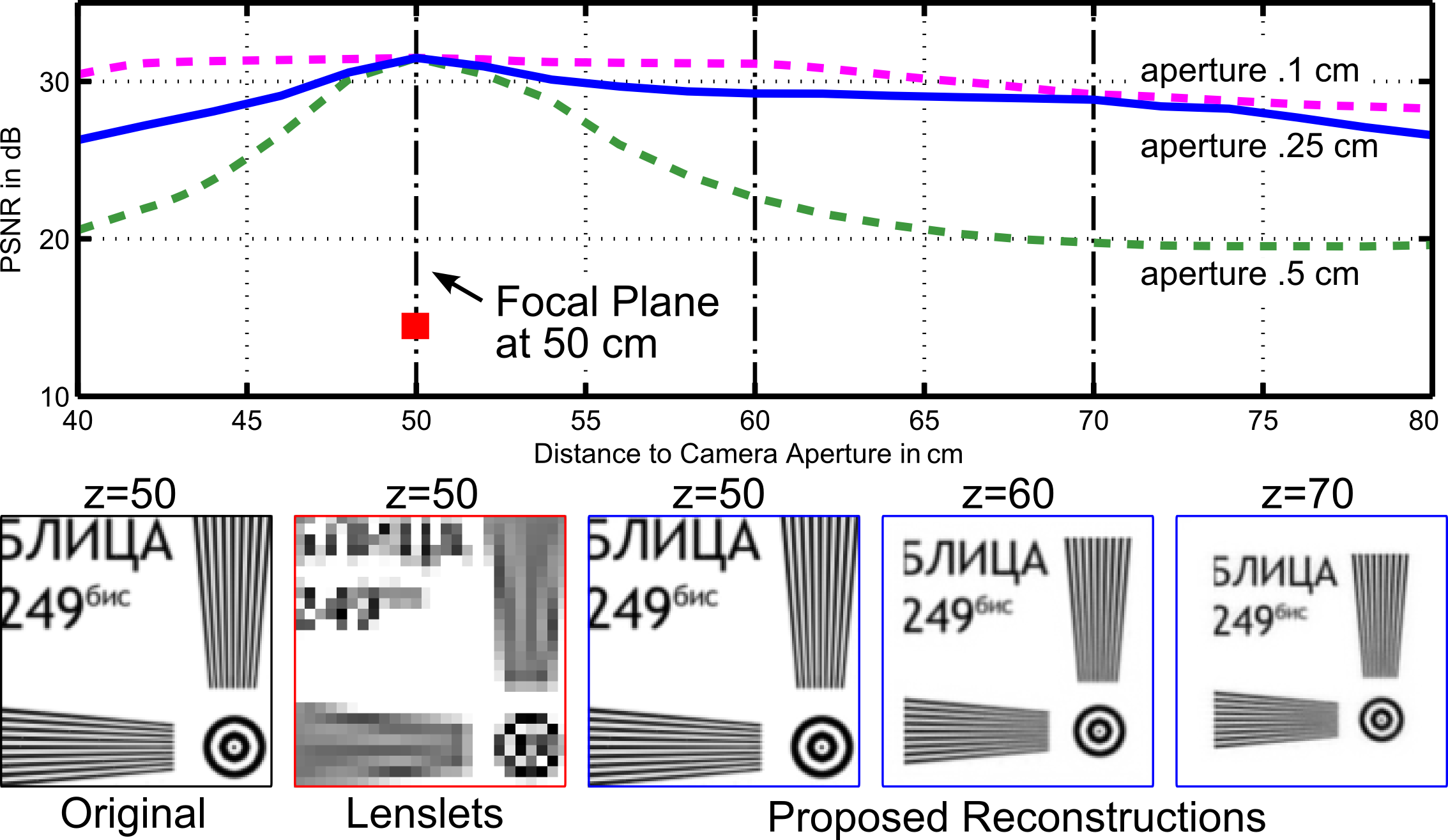

Figure 7: Evaluating depth of field. As opposed to lenslet arrays, the proposed approach preserves most of the image resolution at the focal plane. Reconstruction quality, however, decreases with distance to the focal plane. Central views on the focal plane are shown for the full-resolution light field, lenslet acquisition, and compressive reconstruction; compressive reconstructions are also shown for two other distances. The three plots evaluate reconstruction quality for varying aperture diameters, with a dictionary learned from data corresponding to the blue plot (aperture diameter 0.25 cm). Image credit: MIT Media Lab, Camera Culture Group


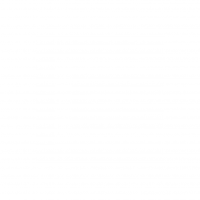

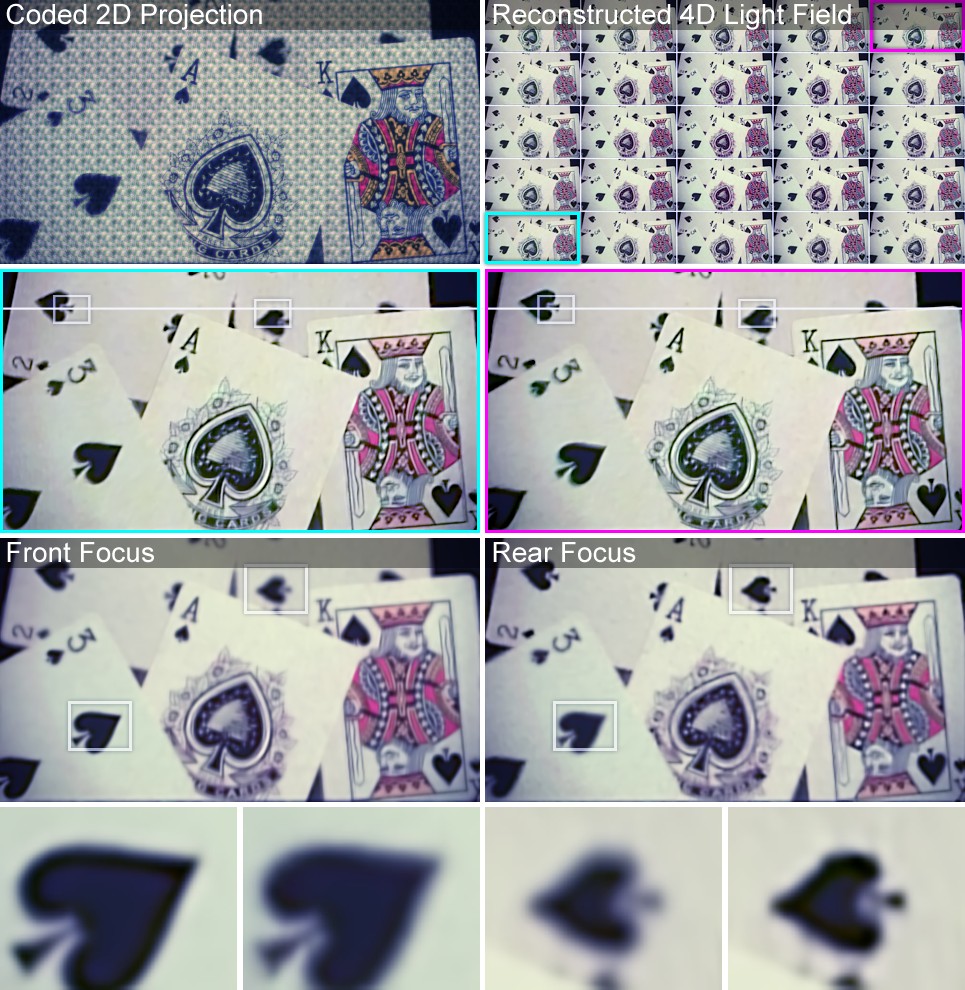

Figure 8: Light field reconstruction from a single coded 2D projection. The scene is composed of diffuse objects at different depths; processing the 4D light field allows for post-capture refocus. Image credit: MIT Media Lab, Camera Culture Group
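Post-capture refocus from a reconstructed 4D light field is commonly implemented with shift-and-add: each view is translated in proportion to its angular offset from the central view and all views are averaged, which brings one depth plane into alignment. The page does not state which refocusing method produced Figure 8, so treat this as a generic sketch; it assumes integer pixel shifts, periodic borders (from `np.roll`), and a hypothetical `slope` parameter (pixels of shift per view) whose sign convention is arbitrary.

```python
import numpy as np


def refocus(light_field: np.ndarray, slope: float) -> np.ndarray:
    """Shift-and-add refocus of a light field with shape (X, Y, U, V).

    View (u, v) is translated by round(slope * (u - uc)), round(slope * (v - vc)) pixels,
    where (uc, vc) is the central view, and all views are averaged. Shifts are
    rounded to integers and np.roll wraps around at the borders.
    """
    X, Y, U, V = light_field.shape
    uc, vc = (U - 1) / 2.0, (V - 1) / 2.0
    out = np.zeros((X, Y))
    for u in range(U):
        for v in range(V):
            shift = (int(round(slope * (u - uc))), int(round(slope * (v - vc))))
            out += np.roll(light_field[:, :, u, v], shift=shift, axis=(0, 1))
    return out / (U * V)


# Synthetic example: a single point whose position moves by -1 pixel per unit view offset.
lf = np.zeros((9, 9, 3, 3))
for u in range(3):
    for v in range(3):
        lf[4 - (u - 1), 4 - (v - 1), u, v] = 1.0
in_focus = refocus(lf, 1.0)    # all nine views align at pixel (4, 4)
defocused = refocus(lf, 0.0)   # plain average: the point spreads over nine pixels
```

Sweeping `slope` over a range of values produces a focal stack, one image per depth plane.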


Figure 9: Light field reconstructions of an animated scene. We capture a coded sensor image for multiple frames of a rotating carousel (left) and reconstruct 4D light fields for each of them. The techniques explored in this paper allow for higher-resolution light field acquisition than previous single-shot approaches. Image credit: MIT Media Lab, Camera Culture Group


Figure 10: Application: light field compression. A light field is divided into small 4D patches, each represented by only a few coefficients. Light field atoms achieve higher image quality than DCT coefficients. Image credit: MIT Media Lab, Camera Culture Group
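The compression experiment can be sketched as a k-term approximation: transform a 4D patch, keep only its k largest-magnitude coefficients, and invert. Reproducing the light field atom result requires a learned overcomplete dictionary and a sparse coder; the self-contained sketch below shows only the DCT baseline the caption compares against, using an orthonormal separable 4D DCT built from Kronecker products (a row-major vectorization of the patch is assumed). The patch size, k values, and random stand-in data are illustrative assumptions.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix: row k is the k-th cosine basis vector, so C @ C.T = I."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)
    return C


def dct4_basis(shape) -> np.ndarray:
    """Separable 4D DCT as one matrix acting on a row-major vectorized (X, Y, U, V) patch."""
    B = np.ones((1, 1))
    for n in shape:
        B = np.kron(B, dct_matrix(n))
    return B


def compress_topk(patch: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest-magnitude DCT coefficients of a 4D patch and reconstruct."""
    B = dct4_basis(patch.shape)
    coeffs = B @ patch.ravel()
    keep = np.argsort(np.abs(coeffs))[::-1][:k]
    sparse = np.zeros_like(coeffs)
    sparse[keep] = coeffs[keep]
    return (B.T @ sparse).reshape(patch.shape)   # B is orthonormal, so B.T inverts it


def psnr(reference: np.ndarray, estimate: np.ndarray, peak: float = 1.0) -> float:
    mse = float(np.mean((reference - estimate) ** 2))
    return float("inf") if mse == 0.0 else float(10.0 * np.log10(peak ** 2 / mse))


rng = np.random.default_rng(0)
patch = rng.random((4, 4, 3, 3))   # stand-in data; a real test would use light field patches
for k in (5, 20, 80):
    print(k, psnr(patch, compress_topk(patch, k)))
```

Because the basis is orthonormal, the squared error equals the energy of the discarded coefficients, so quality can only improve as k grows; a basis in which natural light fields concentrate their energy in fewer coefficients compresses better at the same k.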


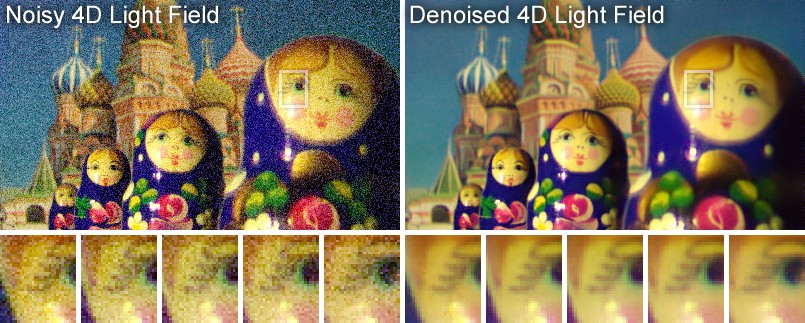

Figure 11: Application: light field denoising. Sparse coding and the proposed 4D dictionaries can remove noise from 4D light fields. Image credit: MIT Media Lab, Camera Culture Group
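Sparse-coding denoising can be sketched per patch: greedily add atoms until the residual energy drops to the level expected from the noise, then keep only the sparse approximation. The stopping threshold n·(gain·σ)² with gain = 1.15 is an assumption borrowed from common practice in dictionary-based image denoising rather than a value stated here; the dictionary is assumed to have unit-norm columns, and a full pipeline would also average the estimates of overlapping patches, which this sketch omits. The identity dictionary in the usage example is a hypothetical stand-in for a learned 4D dictionary.

```python
import numpy as np


def denoise_patch(noisy: np.ndarray, dictionary: np.ndarray, sigma: float,
                  gain: float = 1.15, max_atoms=None) -> np.ndarray:
    """Sparse-code one noisy patch and return its sparse approximation.

    Atoms are added greedily (OMP) until the residual energy falls below
    n * (gain * sigma)**2, the energy expected from i.i.d. Gaussian noise of std sigma
    (gain = 1.15 is an assumed safety factor). Columns of `dictionary` are assumed
    to have unit norm.
    """
    y = np.asarray(noisy, dtype=float).ravel()
    threshold = y.size * (gain * sigma) ** 2
    max_atoms = dictionary.shape[1] if max_atoms is None else max_atoms
    support = []
    coef = np.zeros(0)
    residual = y.copy()
    while len(support) < max_atoms and residual @ residual > threshold:
        scores = np.abs(dictionary.T @ residual)
        if support:
            scores[support] = -np.inf             # never pick the same atom twice
        support.append(int(np.argmax(scores)))
        coef, *_ = np.linalg.lstsq(dictionary[:, support], y, rcond=None)
        residual = y - dictionary[:, support] @ coef
    alpha = np.zeros(dictionary.shape[1])
    if support:
        alpha[support] = coef
    return (dictionary @ alpha).reshape(np.shape(noisy))


# Toy example: two strong coefficients plus small deterministic "noise" of magnitude 0.01.
clean = np.zeros(16)
clean[3], clean[11] = 10.0, -8.0
noise = 0.01 * np.where(np.arange(16) % 2 == 0, 1.0, -1.0)
denoised = denoise_patch(clean + noise, np.eye(16), sigma=0.01)
```

The noise energy that falls outside the selected atoms is discarded; with a dictionary in which natural light field patches are sparse, most of the noise ends up there.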

Contact

Technical Details

Gordon Wetzstein, PhD, MIT Media Lab, gordonw (at) media.mit.edu

Kshitij Marwah, MIT Media Lab, ksm (at) media.mit.edu

Press

Alexandra Kahn, Senior Press Liaison, MIT Media Lab

akahn (at) media.mit.edu or 617/253.0365

Acknowledgements

We thank the reviewers for valuable feedback and the following people for insightful discussions and support: Ashok Veeraraghavan, Rahul Budhiraja, Kaushik Mitra, Matthew O’Toole, Austin Lee, Sunny Jolly, Ivo Ihrke, Wolfgang Heidrich, Guillermo Sapiro, and Silicon Micro Display. Gordon Wetzstein was supported by an NSERC Postdoctoral Fellowship and the DARPA SCENICC program. Ramesh Raskar was supported by an Alfred P. Sloan Research Fellowship and a DARPA Young Faculty Award. We recognize the support of Samsung and NSF through grants 1116452 and 1218411.

Related Projects


- Coded Aperture Projection (TOG ’10, ICCP ’13)
- Layered 3D (SIG ’11)
- Polarization Fields (SIG Asia ’11)
- Tensor Displays (SIG ’12)
- Adaptive Image Synthesis (SIG ’13)