SpeckleEye: Gestural Interaction for Embedded Electronics in Ubiquitous Computing

Alex Olwal Andy Bardagjy Jan Zizka Ramesh Raskar

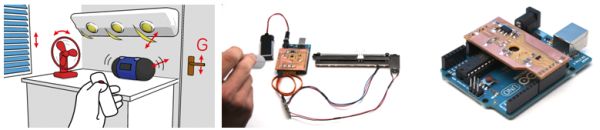

This work describes the design and implementation of an embedded gesture and real-time motion tracking system using laser speckle. SpeckleEye is a low-cost, scalable, open-source toolkit for embedded speckle sensing and gestural interaction with ubiquitous devices in the environment. We describe embedded speckle sensing hardware and firmware, a cross-platform gesture recognition library optimized to run on embedded processors, and a set of prototypes that illustrate the flexibility of our platform.

SpeckleEye Arduino Shield: software libraries + hardware schematics

Contact us if you are interested in getting a board!

CHI 2012 Extended Abstracts

Olwal, A., Bardagjy, A., Zizka, J., and Raskar, R. SpeckleEye: Gestural Interaction for Embedded Electronics in Ubiquitous Computing. To appear in Extended abstracts of CHI 2012 (SIGCHI Conference on Human Factors in Computing Systems), Austin, TX, May 5-10, 2012.

SpeckleSense: Fast, Precise, Low-cost and Compact Motion Sensing using Laser Speckle

Jan Zizka Alex Olwal Ramesh Raskar

SpeckleSense introduces a novel set of motion-sensing configurations based on laser speckle sensing that are particularly suitable for human-computer interaction. SpeckleSense is a technique for optical motion sensing with the following properties:

- fast (10 000 fps)

- precise (50 μm)

- lensless → tiny (1 × 2 mm)

- low power

- needs minimal computation → minimal latency

In our UIST 2011 paper, we provide an overview and design guidelines for laser speckle sensing for user interaction and introduce four general speckle projector/sensor configurations. We describe a set of prototypes and applications that demonstrate the versatility of these laser speckle sensing techniques.

SpeckleSense video (4:49)

UIST 2011 paper

Zizka, J., Olwal, A., and Raskar, R. SpeckleSense: Fast, Precise, Low-cost and Compact Motion Sensing using Laser Speckle. Proceedings of UIST 2011 (ACM Symposium on User Interface Software and Technology), Santa Barbara, CA, Oct 16-19, 2011, pp. 489-498.

Raw output from “slow” 300 fps camera (0:33)

SpeckleSense exploits the laser speckle phenomenon to track small motions using fast and efficient image processing. This video illustrates how tiny movements result in dramatic changes in the image seen by the speckle sensor (here, a 300 fps camera). To allow fast real-time interaction, we use dedicated optical sensors that are rated at thousands of fps.
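As a rough illustration of how a shift between two consecutive speckle frames can be estimated, the sketch below does a brute-force block-matching search in pure Python. It is a generic technique chosen for illustration, not necessarily the processing used by SpeckleSense or its dedicated sensors; the function names `estimate_shift` and `shift_frame`, the 16 × 16 frame size, and the synthetic random "speckle" pattern are all assumptions made for this example.

```python
import random

def estimate_shift(prev, curr, max_shift=3):
    """Return the integer (dx, dy) that best maps `prev` onto `curr`.

    Brute-force block matching: for each candidate shift, compare the
    overlapping region of both frames and keep the shift with the lowest
    mean absolute difference. (Hypothetical helper, for illustration only.)
    """
    h, w = len(prev), len(prev[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, n = 0, 0
            # Only visit pixels whose shifted position stays inside the frame.
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    err += abs(prev[y][x] - curr[y + dy][x + dx])
                    n += 1
            if n and err / n < best_err:
                best, best_err = (dx, dy), err / n
    return best

def shift_frame(frame, dx, dy, fill=0):
    """Translate a frame by (dx, dy) pixels, padding uncovered pixels with `fill`."""
    h, w = len(frame), len(frame[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = frame[y][x]
    return out

# Synthetic stand-in for a speckle image: random intensities, fixed seed.
rng = random.Random(0)
frame_a = [[rng.randrange(256) for _ in range(16)] for _ in range(16)]
frame_b = shift_frame(frame_a, dx=2, dy=-1)

print(estimate_shift(frame_a, frame_b))  # → (2, -1)
```

Accumulating the per-frame shifts over time gives a relative motion track. The search window (`max_shift`) bounds how far the pattern may move between frames, which is one reason a higher frame rate helps when tracking fast motion.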

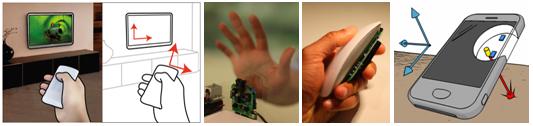

TouchController = Spatial Motion + Multi-touch (0:33)

The TouchController is a hybrid input device where we combine our SpeckleSense technique with a multi-touch mouse. This allows the user to manipulate on-screen content using spatial motion, while also being able to use multi-touch gestures on its surface for rotation and zooming.

Mobile Medical Viewer with 3D Motion (0:34)

This video demonstrates how SpeckleSense is used to track a mobile device in 3D above a surface. The user can pan around in a larger image by moving the device in the plane above the surface, and can also view different slices of the body by varying the distance to the surface.
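The mapping from tracked motion to viewer state could look roughly like the sketch below: in-plane position drives panning, and height above the surface selects a slice. This is a minimal illustration, not the viewer's actual code; the function name `viewer_state` and every parameter (working height range, slice count, pixels per millimeter) are hypothetical values chosen for the example.

```python
def viewer_state(x_mm, y_mm, height_mm, *, num_slices=101,
                 min_height_mm=10.0, max_height_mm=110.0, pixels_per_mm=4.0):
    """Map a tracked device position to (pan_x_px, pan_y_px, slice_index).

    All parameters are assumed values for illustration only.
    """
    # In-plane motion pans the larger image.
    pan_x = round(x_mm * pixels_per_mm)
    pan_y = round(y_mm * pixels_per_mm)
    # Normalize height into [0, 1], clamping outside the working range.
    t = (height_mm - min_height_mm) / (max_height_mm - min_height_mm)
    t = min(1.0, max(0.0, t))
    # Distance to the surface selects the slice.
    slice_index = round(t * (num_slices - 1))
    return pan_x, pan_y, slice_index

print(viewer_state(25.0, -10.0, 60.0))  # → (100, -40, 50)
```

Clamping the height keeps the slice index valid when the device is lifted beyond the assumed working range.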

Motion input for public displays (0:16)

We enable natural interaction with public displays by using an integrated speckle sensor and laser behind a glass window. The user's hand reflects speckles that are captured by the speckle sensor. This video shows how a user controls the images shown in an image viewer by moving a hand.